AWS Database Blog

Amazon ElastiCache for Redis 7: Get up to 72% higher throughput with enhanced I/O multiplexing

By Mickey Hoter and Ohad Shacham, on February 8, 2023, in Amazon ElastiCache

Intermediate (200)

Amazon ElastiCache for Redis is a fast in-memory data store that delivers cost-effective performance for modern applications. It is a fully managed service that scales to millions of operations per second with microsecond response times.

Open-source Redis, also known as Redis OSS, is a highly popular NoSQL key-value store known for its excellent performance. Customers choose caching with ElastiCache for Redis to lower database costs and improve performance. As they scale their applications, they increasingly need high throughput and the ability to handle many concurrent connections. In March 2019, we launched enhanced I/O, which delivered up to 83% higher throughput and up to 47% lower latency per node. In November 2021, we improved the throughput of TLS-enabled clusters by offloading encryption to threads other than the Redis engine thread. In this post, we are pleased to announce enhanced I/O multiplexing, which further improves throughput and latency without requiring any changes to your applications.

**Enhanced I/O multiplexing in ElastiCache for Redis 7**

ElastiCache for Redis version 7 and above now includes enhanced I/O multiplexing, which delivers significant improvements in throughput and latency at scale. Enhanced I/O multiplexing is best suited to throughput-bound workloads with many client connections, and its benefits grow with the level of workload concurrency. For example, on an r6g.xlarge node serving 5,200 concurrent clients, you can achieve up to 72% higher throughput (read and write operations per second) and up to 71% lower P99 latency compared with ElastiCache for Redis 6. For these types of workloads, I/O processing can become the factor that limits how far a node can scale. With enhanced I/O multiplexing, each dedicated network I/O thread pipelines commands from multiple clients into the ElastiCache for Redis engine, taking advantage of the engine’s ability to process commands efficiently in batches.

Enhanced I/O multiplexing is automatically available with ElastiCache for Redis 7 in every AWS Region at no additional charge. For more details, see the ElastiCache user guide.

When you use ElastiCache for Redis version 7, you don’t need to change your application or service configuration to benefit from enhanced I/O multiplexing.

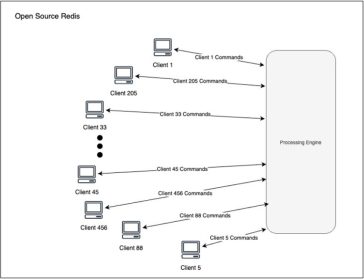

**How it works**

The accompanying diagram gives a high-level view of how Redis OSS handles client I/O, up to ElastiCache for Redis version 6.

The Redis OSS engine can serve many clients at the same time. The engine is single-threaded, and its processing power is shared among the connected clients. For each client, the engine handles the connection, parses the request, and executes the commands. In this setup, the other available CPUs go unused.

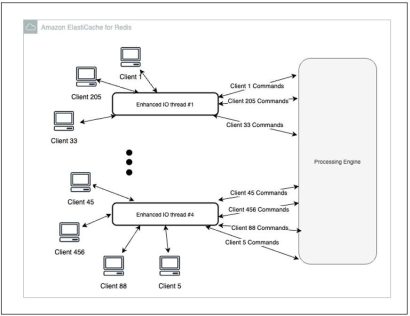

The accompanying diagram shows ElastiCache for Redis with enhanced I/O, which was launched with Redis OSS version 5.0.3. Available CPUs are used to handle client connections on behalf of the ElastiCache for Redis engine. Open-source Redis OSS 6 took a comparable approach when it introduced a related concept known as I/O threads.

With improved input and output capabilities the engine’s processing power is dedicated to executing commands instead of managing network connections. As a result you can achieve up to an 83%

increase in output and a reduction in delay by as much as 47% for each node. With multiplexing

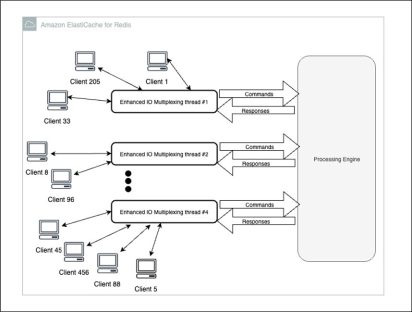

rather than creating a separate channel for each client each improved I/O thread merges the commands into one channel with the ElastiCache for Redis engine as illustrated in the diagram below.

In the preceding diagram, each enhanced I/O thread handles multiple clients and uses a single channel to the main ElastiCache for Redis engine thread. The enhanced I/O thread collects client requests and multiplexes them into a single batch, which is then sent to the ElastiCache for Redis engine for processing. This approach allows commands to be executed more efficiently, which improves performance, as the following section shows.
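The flow described above can be sketched as a toy model. This is only an illustration of the idea, not ElastiCache’s implementation: the function names, the round-robin assignment of clients to I/O threads, and the in-memory `store` dictionary are all assumptions made for the example.

```python
from collections import defaultdict


def assign_clients(client_ids, num_io_threads):
    """Assign each client to one I/O thread (round-robin is an assumption)."""
    assignment = defaultdict(list)
    for i, cid in enumerate(client_ids):
        assignment[i % num_io_threads].append(cid)
    return dict(assignment)


def multiplex(pending, assignment):
    """Merge the pending commands of each thread's clients into one batch.

    pending: client_id -> list of commands waiting to be processed
    returns: thread_id -> list of (client_id, command), one channel per thread
    """
    batches = {}
    for thread_id, clients in assignment.items():
        batch = []
        for cid in clients:
            batch.extend((cid, cmd) for cmd in pending.get(cid, []))
        batches[thread_id] = batch
    return batches


def engine_execute(batches, store):
    """The single engine thread drains each channel's batch in turn."""
    replies = defaultdict(list)
    for thread_id in sorted(batches):
        for cid, (op, key, *rest) in batches[thread_id]:
            if op == "SET":
                store[key] = rest[0]
                replies[cid].append("OK")
            elif op == "GET":
                replies[cid].append(store.get(key))
    return dict(replies)


# Four clients share two I/O threads, so the engine sees two channels, not four.
clients = ["c1", "c2", "c3", "c4"]
assignment = assign_clients(clients, num_io_threads=2)
pending = {
    "c1": [("SET", "k", "v")],
    "c2": [("GET", "k")],
    "c3": [("GET", "k")],
    "c4": [("SET", "j", "w")],
}
batches = multiplex(pending, assignment)
replies = engine_execute(batches, store={})
```

In this sketch the number of channels equals the number of I/O threads regardless of how many clients connect, which is the property the multiplexing design relies on.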

**Performance analysis**

In this section, we share benchmark results for the memory-optimized R5 (x86) and R6g (ARM-based Graviton2) nodes. We chose a typical caching scenario of 20% SET (write) commands and 80% GET (read) commands. The dataset was initialized with 3 million 16-byte keys, each paired with a 512-byte string value. ElastiCache and the client applications ran in the same Availability Zone.
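To make the workload shape concrete, here is a minimal sketch of a command generator with the same mix and sizes. It is not the benchmark tool used for these results; the `key:` naming scheme, the uniform key distribution, and the constant value payload are assumptions chosen for illustration.

```python
import random

KEY_COUNT = 3_000_000   # number of keys in the dataset
KEY_SIZE = 16           # bytes per key
VALUE_SIZE = 512        # bytes per string value
GET_RATIO = 0.8         # 80% GET, 20% SET


def make_key(index):
    """Build a 16-byte key: 4-byte prefix plus a 12-digit zero-padded index."""
    return f"key:{index:012d}".encode()


def generate_commands(n_ops, seed=0):
    """Yield n_ops commands with roughly an 80/20 GET/SET mix."""
    rng = random.Random(seed)
    value = b"v" * VALUE_SIZE
    for _ in range(n_ops):
        key = make_key(rng.randrange(KEY_COUNT))  # uniform choice (assumption)
        if rng.random() < GET_RATIO:
            yield ("GET", key)
        else:
            yield ("SET", key, value)


commands = list(generate_commands(10_000, seed=1))
get_share = sum(1 for c in commands if c[0] == "GET") / len(commands)
```

Each tuple could be handed to any Redis client library; the generator itself has no network dependency, so the mix and sizes can be checked offline.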

**Throughput gain**

The following chart shows the results of benchmark tests run at different client concurrency levels (400, 2,000, and 5,200 clients) on a range of node sizes. It shows the percentage improvement in throughput (requests per second) achieved with enhanced I/O multiplexing.

With up to 72% more requests per second, enhanced I/O multiplexing improves overall throughput, as the preceding chart shows. Note that the improvement grows as the number of concurrent clients increases. For example, r6g.xlarge delivers 26%, 48%, and 72% more operations per second with 400, 2,000, and 5,200 concurrent clients, respectively. The following table shows the actual figures behind these improvements.

| ElastiCache for Redis Version | 400 Clients | 2,000 Clients | 5,200 Clients |
|---|---|---|---|
| 6.2.6 (ops/sec) | 313,143 | 235,562 | 202,770 |
| 7.05 (ops/sec) | 395,819 | 348,550 | 349,239 |
| Improvement | 26% | 48% | 72% |
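As a quick check, the improvement row can be reproduced from the two throughput rows. The snippet below is a small sketch using only the figures from the table; the variable and function names are illustrative.

```python
# Throughput figures (ops/sec) from the table, in client-count order.
clients = (400, 2000, 5200)
ops_v6 = (313_143, 235_562, 202_770)
ops_v7 = (395_819, 348_550, 349_239)


def improvement_pct(before, after):
    """Relative gain of `after` over `before`, rounded to a whole percent."""
    return round((after - before) / before * 100)


gains = [improvement_pct(b, a) for b, a in zip(ops_v6, ops_v7)]
for n, gain in zip(clients, gains):
    print(f"{n} clients: +{gain}%")
```

The figures also show where the gain comes from: throughput on version 6.2.6 falls as concurrency rises, while the newer version holds close to 349,000 operations per second from 2,000 to 5,200 clients.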


The multiplexing technique improves the processing efficiency of ElastiCache for Redis in much the same way as the pipeline feature in Redis OSS. Both Redis OSS pipelines and ElastiCache for Redis enhanced I/O multiplexing process commands in batches. However, whereas a Redis OSS pipeline batch contains commands from a single client, an enhanced I/O multiplexing batch contains commands from multiple clients.

**Client-side latency reduction**

Running at maximum throughput is not advisable, because it can cause CPU spikes and higher latencies. To avoid that, we measured latency at 142,000 requests per second, which was 70% of the capacity of ElastiCache for Redis 6.2.6 on r6g.xlarge. We measured client-side latency with ElastiCache for Redis version 6 and compared it with the same workload on ElastiCache for Redis version 7. The following table shows the results of a test on r6g.xlarge, including the percentage reduction in P50 and P99 latency.

| ElastiCache for Redis Version | P50 Latency | P99 Latency |
|---|---|---|
| 6.2.6 (ms) | 0.55 | 8.3 |
| 7.05 (ms) | 0.44 | 2.45 |
| Latency Reduction | 20% | 71% |
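For readers less familiar with the P50 and P99 notation, the following sketch shows one common way to derive such percentiles from raw client-side measurements. It uses the nearest-rank method, which may differ from the method used in the benchmark, and the sample latencies are invented purely for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Made-up latencies in milliseconds: mostly fast, with a slow tail.
latencies_ms = [0.4] * 98 + [2.0, 9.0]

p50 = percentile(latencies_ms, 50)  # typical request
p99 = percentile(latencies_ms, 99)  # tail latency
```

P50 describes the typical request, while P99 captures the slow tail, which is why the two columns in the table can improve by very different amounts.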


As with the throughput gain, the reduction in client-side latency is clearly visible on ElastiCache instances that use enhanced I/O multiplexing.

The more clients ElastiCache serves concurrently, the greater the latency benefit from enhanced I/O multiplexing. Why is that?

Enhanced I/O multiplexing collects commands from many clients, sometimes thousands of them, and combines them into a small number of channels. The number of channels equals the number of enhanced I/O threads. Having fewer channels improves access locality, which in turn improves memory cache usage. For example, instead of each client having its own read/write buffer, all clients share the same channel buffer. Expensive memory cache misses are reduced, and the time spent on each command drops. The result is faster response times, which means lower latency and higher throughput.

**Conclusion**

With enhanced I/O multiplexing, you get higher throughput and lower latency without changing your applications. Enhanced I/O multiplexing is automatically available when you use ElastiCache for Redis 7, in all AWS Regions and at no additional charge.

For more details, see Supported node types. To get started, create a new cluster or upgrade an existing one using the ElastiCache console.
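If you prefer scripting to the console, a cluster can also be created through the AWS SDK. The following is a minimal sketch using boto3’s ElastiCache `create_cache_cluster` call; the cluster ID, Region, node type, and the `"7.0"` engine version string are placeholder assumptions, and a real deployment would normally also need subnet group and security group settings.

```python
def build_cluster_request(cluster_id,
                          node_type="cache.r6g.xlarge",
                          engine_version="7.0"):
    """Build keyword arguments for boto3's create_cache_cluster call.

    All default values here are illustrative assumptions.
    """
    return {
        "CacheClusterId": cluster_id,
        "Engine": "redis",
        "EngineVersion": engine_version,
        "CacheNodeType": node_type,
        "NumCacheNodes": 1,  # a Redis cache cluster has a single node
    }


def create_cluster(cluster_id, region_name):
    """Create the cluster; requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the request builder works without the SDK

    client = boto3.client("elasticache", region_name=region_name)
    return client.create_cache_cluster(**build_cluster_request(cluster_id))


request = build_cluster_request("demo-redis7")  # hypothetical cluster ID
```

Calling `create_cluster("demo-redis7", "us-east-1")` would submit the request; nothing is sent to AWS until that function is invoked.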

Stay tuned for more performance improvements for ElastiCache for Redis.