Enterprise-Grade Secure RAG Infrastructure

We provide fully secure RAG deployment solutions tailored for enterprises with stringent data governance, regulatory compliance, and security requirements. Our on-premise and hybrid cloud architectures ensure complete data sovereignty while maintaining the performance and scalability benefits of modern AI infrastructure.

Deployment Architecture Options

Deployment Model Comparison

| Deployment Model | Best For | Data Location | Control Level | Infrastructure Responsibility |
|---|---|---|---|---|
| Full On-Premise | Highly regulated industries, air-gapped networks | Customer data centers | Complete control | Customer managed |
| Private Cloud | Organizations with existing private cloud investment | Customer private cloud | High control | Shared responsibility |
| Hybrid Cloud | Balance of control and scalability | Split between on-premise and cloud | Granular control | Hybrid management |
| Virtual Private Cloud | Cloud benefits with enhanced isolation | Cloud provider with isolation | Managed control | Primarily cloud provider |

On-Premise Deployment Architecture

Self-Contained RAG Infrastructure

Complete On-Premise Stack

User Access Layer → API Gateway → RAG Processing Layer → Data Layer → Infrastructure Layer

- User Access Layer: Internal Users, Applications

- API Gateway: Authentication & Rate Limiting

- RAG Processing Layer: Query Processing, Retrieval, Generation

- Data Layer: Vector Stores, Databases, Knowledge Bases

- Infrastructure Layer: Kubernetes, Storage, Networking

Core Infrastructure Components

- Kubernetes Cluster: Managed or self-managed (OpenShift, Rancher, vanilla K8s)

- GPU/CPU Compute: On-premise AI accelerators or standard servers

- Storage Systems: High-performance NAS/SAN for vector storage

- Networking: Secure internal network with optional air-gapped configuration

Hardware & Infrastructure Requirements

Minimum Configuration

| Component | Development | Production (Small) | Production (Enterprise) |
|---|---|---|---|
| Compute Nodes | 3 nodes, 32GB RAM | 5 nodes, 64GB RAM | 10+ nodes, 128GB+ RAM |
| GPU Acceleration | Optional (1-2 GPUs) | Recommended (4 GPUs) | Required (8+ GPUs) |
| Storage | 1TB SSD | 5TB NVMe SSD | 50TB+ NVMe SSD cluster |
| Networking | 1GbE | 10GbE | 25/100GbE with RDMA |
| High Availability | Basic | Active-Passive | Active-Active multi-site |

GPU Optimization

- NVIDIA DGX Systems: Integrated AI infrastructure

- GPU Server Racks: Custom-configured GPU servers

- Inference Optimizers: TensorRT, Triton Inference Server

- Reduced Precision: FP16 mixed precision and INT8 quantization for efficiency

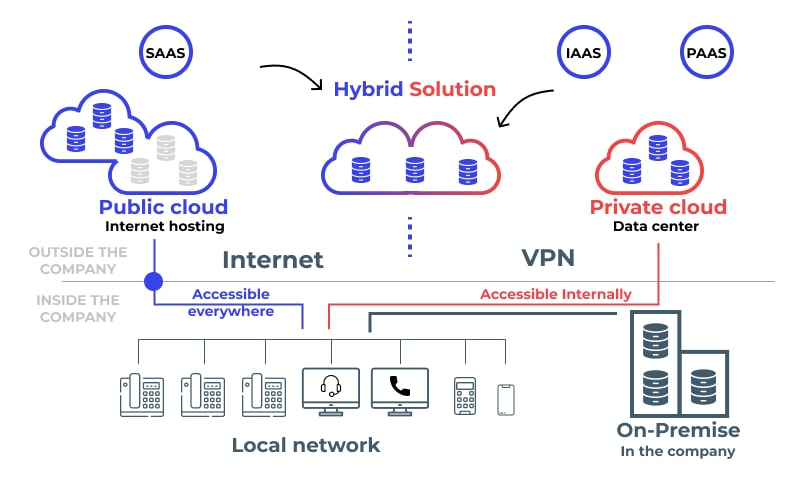

Hybrid Cloud Deployment Architecture

Intelligent Workload Distribution

Strategic Workload Placement

Sensitive Workloads → On-Premise Infrastructure → Secure Data Boundary

- Sensitive Workloads: Data Processing, Vector Storage, Query Processing

- On-Premise Infrastructure: Dedicated Hardware, Complete Isolation, Full Control

- Secure Data Boundary: Sensitive Data Never Leaves the Corporate Network

Non-Sensitive Workloads → Cloud Infrastructure → Elastic Scaling

- Non-Sensitive Workloads: Training, Batch Processing, Development, Testing

- Cloud Infrastructure: Cloud GPUs, Managed Services, Auto-scaling

- Elastic Scaling: Burst Capacity, Cost Optimization, Global Distribution

Data Flow Architecture

On-Premise Data Sources → Secure Gateway → Processing Decision → Execution Location

- On-Premise Data Sources: Sensitive Documents, PII, IP, Regulated Data

- Secure Gateway: Encrypted Tunnel, Authentication, Access Control

- Processing Decision: Classification as Sensitive vs. Non-Sensitive

- Execution Location: On-Premise for Sensitive Data, Cloud for the Rest

Hybrid Integration Patterns

Pattern 1: Data Residency with Cloud Processing

- Data Stays On-Premise: Source documents and vector stores remain on-premise

- Processing in Cloud: LLM inference and non-sensitive processing in cloud

- Secure Data Exchange: Encrypted, temporary data transfer with audit trail

- Compliance: Meets data residency requirements while leveraging cloud scale
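The Processing Decision step in the data flow above, as used by Pattern 1, can be sketched as a small routing function. This is a minimal illustration under stated assumptions, not the platform's implementation. The `Sensitivity` labels, the `Request` fields and the fail-closed rule for unclassified or PII-bearing data are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Sensitivity(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    CONFIDENTIAL = "confidential"  # e.g. regulated data or intellectual property


class Location(Enum):
    ON_PREM = "on-premise"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Request:
    doc_id: str
    sensitivity: Optional[Sensitivity]  # None means "not yet classified"
    contains_pii: bool = False


# Hypothetical policy: these classes must stay inside the secure data boundary.
ON_PREM_ONLY = {Sensitivity.CONFIDENTIAL}


def choose_location(req: Request) -> Location:
    """Route a request by its classification, failing closed when it is unclassified."""
    if req.sensitivity is None or req.contains_pii:
        return Location.ON_PREM
    if req.sensitivity in ON_PREM_ONLY:
        return Location.ON_PREM
    return Location.CLOUD
```

Failing closed means a gap in the classification step adds on-premise load. It does not send unclassified data out of the corporate network.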

Pattern 2: Tiered Storage Architecture

- Hot Data On-Premise: Frequently accessed, sensitive data locally

- Warm/Cold Data in Cloud: Archived, non-sensitive data in cloud storage

- Intelligent Tiering: Automatic data movement based on access patterns

- Cost Optimization: Balance performance requirements with storage costs
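Intelligent tiering is usually driven by how recently or how often data is accessed. The sketch below uses last-access time as a stand-in for access frequency. The 30-day and 180-day windows and the tier names are hypothetical. Sensitive objects are pinned on-premise at every age, consistent with the data residency goal of Pattern 1.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

HOT_WINDOW = timedelta(days=30)    # hypothetical thresholds; tune to real access patterns
COLD_WINDOW = timedelta(days=180)


@dataclass(frozen=True)
class StoredObject:
    key: str
    sensitive: bool
    last_accessed: datetime


def assign_tier(obj: StoredObject, now: datetime) -> str:
    """Choose a storage tier from sensitivity and time since last access."""
    idle = now - obj.last_accessed
    if obj.sensitive:
        # Sensitive data is never tiered out to cloud storage, however old it is.
        return "on-prem-hot" if idle <= HOT_WINDOW else "on-prem-archive"
    if idle <= HOT_WINDOW:
        return "on-prem-hot"
    if idle <= COLD_WINDOW:
        return "cloud-warm"
    return "cloud-cold"
```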

Pattern 3: Burst to Cloud

- Baseline On-Premise: Normal workload handled internally

- Cloud Burst Capacity: Peak loads redirected to cloud resources

- Automatic Scaling: Seamless transition based on load thresholds

- Cost-Effective: Pay for cloud only when needed

Security & Access Control Framework

Zero-Trust Security Model

Core Security Principles

- Never Trust, Always Verify: Continuous authentication and authorization

- Least Privilege Access: Minimum permissions required for each component

- Micro-segmentation: Isolated network segments for different components

- Continuous Monitoring: Real-time security event detection and response

Multi-Layer Security Architecture

Perimeter Security → Network Security → Host Security → Application Security → Data Security

- Perimeter Security: Firewalls, WAF, DDoS Protection

- Network Security: Segmentation, IDS/IPS, Zero Trust Network Access

- Host Security: OS Hardening, Patching, EDR

- Application Security: Code Scanning, Input Validation, Authentication

- Data Security: Encryption at Rest & in Transit, DLP, Access Controls

Fine-Grained Access Control

Role-Based Access Control (RBAC)

User/Service → Authentication → Authorization Engine → Permission Check → Access Decision

- User/Service: Identity

- Authentication: Verify Credentials (MFA, Certificates)

- Authorization Engine: Check Roles & Permissions Against Policies

- Permission Check: Evaluate Specific Resource Access Rights

- Access Decision: Grant/Deny Access with Audit Logging

Attribute-Based Access Control (ABAC)

- User Attributes: Department, clearance level, location

- Resource Attributes: Sensitivity level, classification, owner

- Environmental Attributes: Time of day, network location, threat level

- Policy Engine: Dynamic access decisions based on multiple attributes
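A combined RBAC and ABAC decision for the healthcare scenario below can be sketched as a set of named checks. Every check must pass, and each decision is written to an audit log. The following are illustrative assumptions, not part of the original design:

- the `clinician` role name
- the numeric clearance scale
- the `hospital` network label
- the 07:00 to 19:00 "normal hours" window

A production deployment would typically delegate this to a dedicated policy engine.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Subject:
    user_id: str
    roles: frozenset
    clearance: int                            # user attribute
    assigned_patients: frozenset = frozenset()


@dataclass(frozen=True)
class Resource:
    resource_id: str
    patient_id: str
    required_clearance: int                   # resource attribute (sensitivity level)


@dataclass(frozen=True)
class Context:
    network: str                              # environmental attribute, e.g. "hospital"
    local_time: time
    threat_level: str = "normal"


AUDIT_LOG: list = []
NORMAL_HOURS = (time(7, 0), time(19, 0))      # hypothetical policy window


def is_allowed(sub: Subject, res: Resource, ctx: Context) -> bool:
    """Grant access only if every RBAC and ABAC check passes; log the outcome."""
    checks = {
        "role": "clinician" in sub.roles,                        # RBAC
        "clearance": sub.clearance >= res.required_clearance,    # user vs. resource
        "assignment": res.patient_id in sub.assigned_patients,   # doctor assigned to patient
        "network": ctx.network == "hospital",                    # environment
        "hours": NORMAL_HOURS[0] <= ctx.local_time <= NORMAL_HOURS[1],
        "threat": ctx.threat_level == "normal",
    }
    decision = all(checks.values())
    AUDIT_LOG.append({
        "user": sub.user_id,
        "resource": res.resource_id,
        "decision": "grant" if decision else "deny",
        "failed": [name for name, ok in checks.items() if not ok],
    })
    return decision
```

Recording the names of failed checks makes each denial explainable during an audit.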

Implementation Example: Healthcare Data Access

Doctor Query: "Show me patient 12345's lab results"

→ Authentication: Doctor's credentials + MFA verified

→ Authorization: Check if doctor is assigned to patient

→ Context: Current location = Hospital network, Time = Normal hours

→ Decision: Grant access to specific lab results

→ Logging: Full audit trail with purpose of access

Data Governance & Compliance

Regulatory Compliance Framework

Industry-Specific Compliance

- Healthcare (HIPAA): PHI protection, audit trails, access controls

- Financial (SOX, FINRA): Financial data protection, transaction logging

- Government (FedRAMP): Security controls, continuous monitoring

- International (GDPR): Data subject rights, privacy by design

Compliance Automation

Regulatory Requirements → Control Mapping → Automated Checks → Compliance Reporting → Audit Ready

- Regulatory Requirements: HIPAA, GDPR, SOC 2, ISO 27001, etc.

- Control Mapping: Map to Technical Controls & Configurations

- Automated Checks: Continuous Verification of Controls

- Compliance Reporting: Real-time Compliance Dashboard

- Audit Ready: Pre-built Audit Reports & Evidence

Data Sovereignty & Residency

Geographic Data Control

- Data Residency Enforcement: Ensure data stays in specified jurisdictions

- Cross-Border Transfer Controls: Manage international data movements

- Regional Deployment Options: Multiple geographic deployment sites

- Compliance with Local Laws: Adherence to country-specific regulations

Implementation Features

- Geofencing: Prevent data transfer outside approved regions

- Data Tagging: Automatic classification of data by jurisdiction

- Transfer Approvals: Manual/automated approval for cross-border transfers

- Audit Trail: Complete logging of data location and movements
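Geofencing, transfer approvals and the audit trail can be combined into a single check, sketched below. The jurisdiction-to-site mapping and the site names are hypothetical. Jurisdiction tags are assumed to come from the data tagging step. Records with an unknown jurisdiction are denied unless an explicit approval exists.

```python
from dataclasses import dataclass

# Hypothetical mapping from jurisdiction tag to approved deployment sites.
APPROVED_SITES = {
    "EU": {"eu-frankfurt-dc", "eu-paris-dc"},
    "US": {"us-east-dc", "us-west-dc"},
}

TRANSFER_LOG: list = []


@dataclass(frozen=True)
class TaggedRecord:
    record_id: str
    jurisdiction: str  # assigned by the data tagging step


def authorize_transfer(record: TaggedRecord, destination: str,
                       approved_exceptions: frozenset = frozenset()) -> bool:
    """Allow a move only to an approved site for the record's jurisdiction,
    or when an explicit cross-border approval exists; log every decision."""
    in_region = destination in APPROVED_SITES.get(record.jurisdiction, set())
    approved = (record.record_id, destination) in approved_exceptions
    allowed = in_region or approved
    TRANSFER_LOG.append({
        "record": record.record_id,
        "jurisdiction": record.jurisdiction,
        "destination": destination,
        "allowed": allowed,
        "basis": "geofence" if in_region else ("approval" if approved else "denied"),
    })
    return allowed
```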

Network Architecture & Isolation

Secure Network Design

Segmented Network Architecture

DMZ Zone → Application Zone → Data Zone → Management Zone → Internet

- DMZ Zone: External Access

- Application Zone: RAG API, Web Apps

- Data Zone: Vector DBs, Document Stores

- Management Zone: Admin Access, Monitoring, Logging

- Internet: Limited, Controlled Outbound

Network Security Controls

- Firewall Rules: Application-aware firewall policies

- Network Segmentation: VLANs and micro-segmentation

- Intrusion Detection: Real-time threat detection

- Traffic Encryption: TLS 1.3+ for all communications
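Two of these controls can be sketched in a few lines:

- A default-deny zone-to-zone flow policy. The zone names follow the segmented architecture above, but the port numbers and the specific allowed flows are hypothetical.
- A client TLS context that verifies certificates and rejects anything below TLS 1.3. It uses the standard library `ssl` module, and the `cafile` argument is assumed to point at the internal CA bundle.

```python
import ssl
from typing import Optional

# Hypothetical allow-list of (source zone, destination zone, TCP port); ports are illustrative.
# Any flow not listed is denied, mirroring a default-deny firewall policy.
ALLOWED_FLOWS = {
    ("dmz", "application", 443),        # external access to the RAG API and web apps
    ("application", "data", 5432),      # application tier to a document store
    ("application", "data", 6333),      # application tier to a vector database
    ("management", "application", 22),  # admin access
    ("management", "data", 22),
    ("management", "internet", 443),    # limited, controlled outbound traffic
}


def is_flow_allowed(src_zone: str, dst_zone: str, port: int) -> bool:
    return (src_zone, dst_zone, port) in ALLOWED_FLOWS


def internal_client_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client TLS context with certificate and hostname verification, TLS 1.3 minimum."""
    ctx = ssl.create_default_context(cafile=cafile)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx
```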

Air-Gapped Deployment Option

Fully Isolated Implementation

- Complete Network Isolation: No external network connections

- Physical Media Transfer: Secure data import/export processes

- Internal Certificate Authority: Certificates issued by a private CA whose self-signed root is distributed internally

- Manual Updates: Controlled update processes with validation
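Physical media transfer into an air-gapped environment usually ends with an integrity check before anything is imported. The sketch below compares files on the media against a manifest of SHA-256 digests. It flags missing, altered and unlisted files. The manifest format is an assumption (relative POSIX paths mapped to hex digests). The manifest itself is assumed to be authenticated separately, for example by a signature created on the sending side.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model or index files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_import(media_root: Path, manifest: Dict[str, str]) -> List[str]:
    """Return relative paths that are missing, altered, or not listed in the manifest."""
    problems = []
    for rel_path, expected in manifest.items():
        candidate = media_root / rel_path
        if not candidate.is_file() or sha256_of(candidate) != expected:
            problems.append(rel_path)
    for candidate in media_root.rglob("*"):
        if candidate.is_file():
            rel_path = candidate.relative_to(media_root).as_posix()
            if rel_path not in manifest:
                problems.append(rel_path)  # unexpected file present on the media
    return sorted(problems)
```

An empty result means the media matches the manifest exactly.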

Use Cases

- Classified government systems

- Financial trading platforms

- Critical infrastructure

- Research with intellectual property protection

Deployment & Management Tooling

Infrastructure as Code

Automated Deployment

Terraform/Ansible → Infrastructure Provisioning → Configuration Management → Validation → Deployment

- Terraform/Ansible: Infrastructure Code

- Infrastructure Provisioning: Compute, Network, Storage Resources

- Configuration Management: Software Installation, Configuration, Updates, Security Hardening

- Validation: Security & Compliance Checks

- Deployment: Production Environment

GitOps Workflow

- Infrastructure Git Repository: Version-controlled infrastructure definitions

- Automated Pipeline: CI/CD for infrastructure changes

- Environment Parity: Identical development, staging, production environments

- Rollback Capability: Quick recovery from failed deployments

Container Management

Kubernetes Implementation

- Private Container Registry: On-premise Docker registry

- Pod Security Standards: Strict security constraints enforced by Pod Security Admission

- Network Policies: Fine-grained network control between pods

- Resource Quotas: CPU, memory, storage limits per namespace

Orchestration Features

- High Availability: Multi-node clusters with automatic failover

- Auto-scaling: Horizontal and vertical pod autoscaling

- Service Mesh: Istio/Linkerd for advanced traffic management

- Monitoring Integration: Prometheus/Grafana for cluster monitoring

Backup & Disaster Recovery

Comprehensive Data Protection

Backup Strategy

Real-time Replication → Scheduled Backups → Off-site Copies → Regular Testing → Quick Recovery

- Real-time Replication: Synchronous/Asynchronous Replication to Standby Systems

- Scheduled Backups: Full & Incremental Backups with Retention Policies

- Off-site Copies: Geographic Redundancy, Immutable Storage

- Regular Testing: Validation of Backup Integrity & Recovery Procedures

- Quick Recovery: RTO <4 hours, RPO <15 minutes

Disaster Recovery Tiers

Tier 1 (Critical): RTO <1 hour, RPO <5 minutes (Active-Active multi-site)

Tier 2 (Important): RTO <4 hours, RPO <1 hour (Warm standby)

Tier 3 (Standard): RTO <24 hours, RPO <4 hours (Backup restore)

Business Continuity Planning

High Availability Design

- Active-Active Clusters: Multiple sites serving live traffic

- Load Balancing: Intelligent traffic distribution

- Database Clustering: Multi-master database configurations

- Geo-Redundancy: Multiple geographic deployment sites

Failover Automation

- Automatic Detection: Service health monitoring

- Intelligent Failover: Traffic redirection based on health status

- State Synchronization: Session state preservation during failover

- Recovery Automation: Automatic service restoration
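Health-based failover with automatic recovery can be sketched as follows. This deliberately simple model assumes an active-passive arrangement with a fixed site priority. The failure and recovery thresholds and the site names are hypothetical. Requiring several consecutive good probes before a site is restored (hysteresis) keeps traffic from flapping between sites. An active-active deployment would instead remove unhealthy sites from the load-balancer pool rather than selecting one active site.

```python
from dataclasses import dataclass
from typing import List

FAIL_THRESHOLD = 3      # hypothetical: consecutive failed probes before marking a site down
RECOVER_THRESHOLD = 2   # hypothetical: consecutive good probes before restoring it


@dataclass
class Site:
    name: str
    priority: int               # lower value = preferred
    healthy: bool = True
    _failures: int = 0
    _successes: int = 0

    def record_probe(self, ok: bool) -> None:
        """Update health from one probe result, with hysteresis to avoid flapping."""
        if ok:
            self._successes += 1
            self._failures = 0
            if not self.healthy and self._successes >= RECOVER_THRESHOLD:
                self.healthy = True
        else:
            self._failures += 1
            self._successes = 0
            if self.healthy and self._failures >= FAIL_THRESHOLD:
                self.healthy = False


def active_site(sites: List[Site]) -> Site:
    """Pick the highest-priority healthy site to receive traffic."""
    healthy = [s for s in sites if s.healthy]
    if not healthy:
        raise RuntimeError("no healthy site available")
    return min(healthy, key=lambda s: s.priority)
```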

Performance & Scalability

On-Premise Performance Optimization

Hardware Acceleration

- GPU Optimization: CUDA, TensorRT for inference acceleration

- Storage Acceleration: NVMe, RDMA for high-throughput vector operations

- Network Optimization: SmartNICs, RDMA for low-latency communication

- Memory Optimization: Large RAM configurations for vector caching

Performance Benchmarks

| Metric | On-Premise Target | Cloud Equivalent |
|---|---|---|
| Query Latency (p95) | <150ms | <200ms |
| Indexing Throughput | 10K docs/hour | 8K docs/hour |
| Concurrent Users | 5,000+ | 10,000+ |
| Vector Search QPS | 1,000+ | 2,000+ |

Hybrid Scalability Patterns

Elastic Scaling Strategy

On-Premise Baseline → Monitoring → Scale Decision → Scaling Action → Optimization

- On-Premise Baseline: Normal Load Handled Internally

- Monitoring: Performance Metrics, Cost Tracking

- Scale Decision: Threshold Detection

- Scaling Action: Scale Up/Down On-Premise or Cloud Resources

- Optimization: Cost-Performance Analysis & Rebalancing

Scaling Triggers

- CPU/Memory Utilization: >80% sustained usage

- Query Latency: >300ms average response time

- Queue Depth: Growing pending request queue

- Scheduled Events: Known peak periods
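These triggers can be evaluated together, as in the sketch below. The >80% utilization and >300ms average-latency thresholds come from the list above. Two details are assumptions:

- "Sustained" means every one of the last five samples.
- A "growing" queue means a queue depth that strictly increases across that window.

The function only reports which triggers fired. Choosing between on-premise and cloud capacity is left to the scaling action step.

```python
from statistics import mean
from typing import List, Sequence

UTILIZATION_LIMIT = 0.80   # from the triggers above
LATENCY_LIMIT_MS = 300.0   # from the triggers above
SUSTAIN_SAMPLES = 5        # hypothetical window, e.g. five 1-minute samples


def should_scale_out(cpu: Sequence[float], mem: Sequence[float],
                     latencies_ms: Sequence[float], queue_depths: Sequence[int],
                     scheduled_peak: bool = False) -> List[str]:
    """Return the names of the scaling triggers that fired (empty list = no action)."""
    reasons = []
    recent_cpu = cpu[-SUSTAIN_SAMPLES:]
    recent_mem = mem[-SUSTAIN_SAMPLES:]
    if len(recent_cpu) == SUSTAIN_SAMPLES and min(recent_cpu) > UTILIZATION_LIMIT:
        reasons.append("cpu")
    if len(recent_mem) == SUSTAIN_SAMPLES and min(recent_mem) > UTILIZATION_LIMIT:
        reasons.append("memory")
    if latencies_ms and mean(latencies_ms[-SUSTAIN_SAMPLES:]) > LATENCY_LIMIT_MS:
        reasons.append("latency")
    recent_q = queue_depths[-SUSTAIN_SAMPLES:]
    if len(recent_q) == SUSTAIN_SAMPLES and all(b > a for a, b in zip(recent_q, recent_q[1:])):
        reasons.append("queue")
    if scheduled_peak:
        reasons.append("scheduled")
    return reasons
```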

Cost Management & Optimization

Total Cost of Ownership Analysis

Cost Components

Capital Expenditure (CapEx) + Operational Expenditure (OpEx) = Total Cost of Ownership

- CapEx: Hardware Purchase, Software Licenses, Infrastructure Setup

- OpEx: Power, Cooling, Staff, Maintenance, Cloud Services, Support Contracts

- Total Cost of Ownership: Complete 3-5 Year Ownership Cost with ROI Calculation

Cost Comparison Framework

On-Premise TCO = Hardware + Software + Facilities + Staff + Maintenance

Hybrid TCO = (On-Premise Costs) + (Cloud Usage Costs) - (Efficiency Gains)

Cloud TCO = Subscription Costs + Usage Costs + Data Transfer + Management

Hybrid Cost Optimization

Intelligent Workload Placement

Workload Analysis → Cost Calculation → Placement Decision → Execution → Cost Monitoring

- Workload Analysis: Compute, Memory, Storage, Network Requirements

- Cost Calculation: On-Premise vs. Cloud Pricing, Data Transfer Costs, Licensing

- Placement Decision: Optimal Location Based on Cost, Performance, Security

- Execution: Deploy to Selected Environment

- Cost Monitoring: Track Actual Costs vs. Projections

Cost Saving Strategies

- Reserved Instances: Long-term commitments for predictable workloads

- Spot Instances: Cost-effective for interruptible batch processing

- Auto-scaling: Right-size resources to actual demand

- Data Tiering: Move infrequently accessed data to lower-cost storage
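The TCO formulas in the Cost Comparison Framework can be turned into a small calculator for comparing deployment models over the same 3-5 year horizon. This is a sketch only. It treats hardware and software licenses as one-time CapEx and everything else as annual OpEx, which is an assumption since many licenses are billed annually. The efficiency-gains term in the hybrid formula must be estimated separately.

```python
from dataclasses import dataclass


@dataclass
class OnPremCosts:
    hardware: float               # one-time CapEx
    software: float               # one-time CapEx (assumption: perpetual licenses)
    facilities_per_year: float    # power, cooling, space
    staff_per_year: float
    maintenance_per_year: float   # including support contracts


@dataclass
class CloudCosts:
    subscription_per_year: float
    usage_per_year: float
    data_transfer_per_year: float
    management_per_year: float


def on_prem_tco(c: OnPremCosts, years: int) -> float:
    """Hardware + Software + Facilities + Staff + Maintenance over the horizon."""
    annual = c.facilities_per_year + c.staff_per_year + c.maintenance_per_year
    return c.hardware + c.software + years * annual


def cloud_tco(c: CloudCosts, years: int) -> float:
    """Subscription + Usage + Data Transfer + Management over the horizon."""
    annual = (c.subscription_per_year + c.usage_per_year
              + c.data_transfer_per_year + c.management_per_year)
    return years * annual


def hybrid_tco(on_prem_costs: float, cloud_usage_costs: float, efficiency_gains: float) -> float:
    """(On-Premise Costs) + (Cloud Usage Costs) - (Efficiency Gains)."""
    return on_prem_costs + cloud_usage_costs - efficiency_gains
```

Feed all three functions inputs for the same horizon and scope so the results are directly comparable.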

Implementation Roadmap

Phase 1: Assessment & Design (3-4 Weeks)

Current State Analysis

- Infrastructure assessment and gap analysis

- Security and compliance requirements review

- Data classification and sensitivity analysis

- Performance and scalability requirements

Architecture Design

- Deployment model selection

- Network and security architecture

- Hardware and software specification

- Migration and deployment strategy

Phase 2: Foundation Build (6-8 Weeks)

Infrastructure Setup

- Hardware procurement and installation

- Network configuration and security setup

- Kubernetes cluster deployment

- Storage and backup infrastructure

Core Platform Deployment

- RAG platform installation and configuration

- Security controls implementation

- Monitoring and management tooling

- Initial testing and validation

Phase 3: Data Migration & Integration (4-6 Weeks)

Data Preparation

- Data classification and tagging

- Sensitive data identification

- Migration planning and execution

- Validation and quality assurance

System Integration

- Integration with existing enterprise systems

- Access control integration

- Monitoring and alerting configuration

- User training and documentation

Phase 4: Optimization & Scaling (Ongoing)

Performance Tuning

- Optimization based on real usage

- Scaling adjustments

- Cost optimization

- Continuous improvement

Success Metrics & SLAs

Performance SLAs

- Availability: 99.9% uptime for critical components

- Latency: <200ms p95 response time for queries

- Freshness: <5 minutes for critical data updates

- Recovery: RTO <4 hours, RPO <15 minutes for tier 1 systems
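The latency and availability targets above reduce to simple calculations. The sketch below assumes the nearest-rank definition of the 95th percentile, although monitoring tools may interpolate instead. It also assumes a 30-day month for the downtime budget. On that basis, 99.9% availability leaves (1 - 0.999) × 30 × 24 × 60 = 43.2 minutes of downtime per month.

```python
from typing import Sequence


def p95(samples_ms: Sequence[float]) -> float:
    """95th percentile by the nearest-rank method."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer arithmetic
    return ordered[rank - 1]


def meets_latency_slo(samples_ms: Sequence[float], target_ms: float = 200) -> bool:
    """True if the p95 latency is under the target (200ms per the SLA above)."""
    return p95(samples_ms) < target_ms


def downtime_budget_minutes(availability: float, period_days: float = 30) -> float:
    """Allowed downtime for an availability target over the given period."""
    return (1 - availability) * period_days * 24 * 60
```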

Security & Compliance Metrics

- Security Incidents: <1 critical incident per quarter

- Compliance Adherence: 100% of configured controls operational

- Access Violations: <0.01% of access attempts unauthorized

- Audit Readiness: <24 hours to produce compliance evidence

Business Metrics

- Cost Efficiency: >30% savings vs. public cloud for sensitive workloads

- User Satisfaction: >4.2/5.0 for system reliability and performance

- Adoption Rate: >80% target user adoption within 6 months

- ROI Achievement: >2x return on investment within 18 months

Support & Maintenance

Ongoing Management Services

- 24/7 Monitoring: Proactive system health monitoring

- Security Updates: Regular security patches and updates

- Performance Optimization: Continuous performance tuning

- Capacity Planning: Regular capacity assessment and planning

Enterprise Support Tiers

Tier 1: Basic (8x5, email support, next business day response)

Tier 2: Standard (12x5, phone support, 4-hour response)

Tier 3: Premium (24x7, dedicated engineer, 1-hour response)

Tier 4: Mission Critical (24x7, on-site available, 15-minute response)

Our On-Premise/Hybrid Cloud Deployment solutions give enterprises a balance of control, security, and scalability. Organizations can use advanced RAG capabilities while maintaining complete data sovereignty and meeting the most stringent regulatory requirements.