Automated Knowledge Synchronization for Dynamic Enterprise Environments

We build robust document processing and content pipeline systems that keep your RAG knowledge base in sync with evolving enterprise information sources. Our automation solutions handle both scheduled batch processing and real-time streaming updates, so your AI applications operate on current, accurate data.

Architecture Overview

Dual Processing Modes

Batch Processing Pipeline

For comprehensive updates of large document repositories on scheduled intervals

Streaming Processing Pipeline

For real-time synchronization of dynamic content and immediate knowledge incorporation

Core System Components

1. Source Monitoring & Change Detection

Multi-Source Monitoring Engine

- File System Watchers: Real-time monitoring of network drives, SharePoint, and document repositories

- Database Change Tracking: Change data capture (CDC) for SQL and NoSQL databases

- API Polling Systems: Scheduled checks of external APIs and cloud services

- Web Crawling Infrastructure: Monitoring of public websites and knowledge portals

Change Detection Algorithms

- Hash-based change identification

- Metadata comparison (timestamps, versions, checksums)

- Content diff analysis for partial updates

- Priority scoring for critical document changes
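As an illustration of hash-based change identification, here is a minimal sketch, assuming documents are available as bytes keyed by a document ID. The function names `content_hash` and `detect_changes` are hypothetical and chosen for this example only; a production pipeline would typically persist the previous hashes in a database.

```python
import hashlib


def content_hash(data: bytes) -> str:
    """Return a SHA-256 hex digest used as the document's change fingerprint."""
    return hashlib.sha256(data).hexdigest()


def detect_changes(previous: dict[str, str],
                   current_docs: dict[str, bytes]) -> dict[str, list[str]]:
    """Compare stored hashes with freshly computed ones.

    previous:     doc_id -> hash recorded on the last run
    current_docs: doc_id -> raw bytes observed on this run
    """
    current = {doc_id: content_hash(body) for doc_id, body in current_docs.items()}
    added = sorted(d for d in current if d not in previous)
    modified = sorted(d for d in current if d in previous and current[d] != previous[d])
    deleted = sorted(d for d in previous if d not in current)
    return {"added": added, "modified": modified, "deleted": deleted}
```

Only the IDs reported as added or modified would need to be re-processed, which is also what makes incremental batch runs possible.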

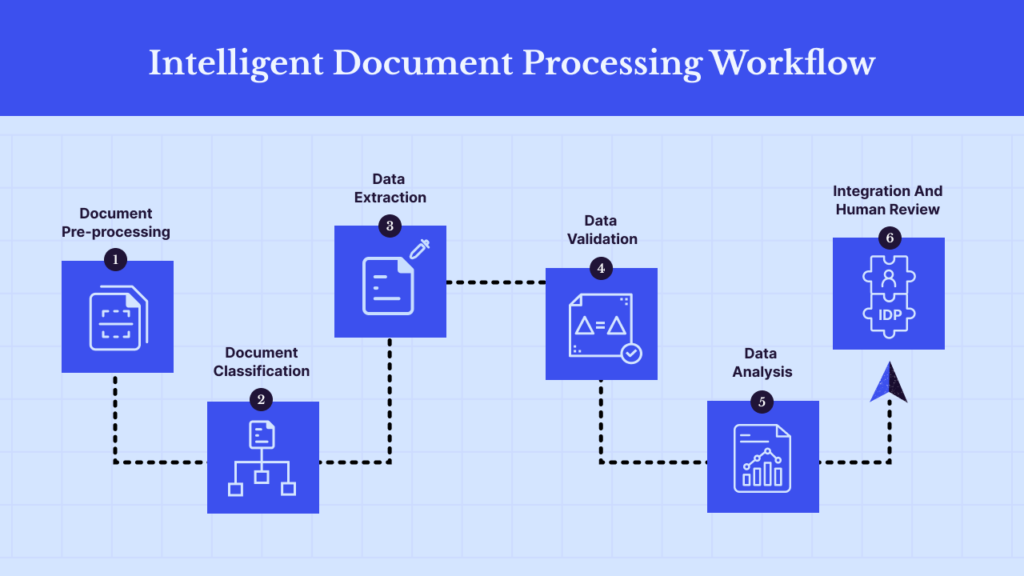

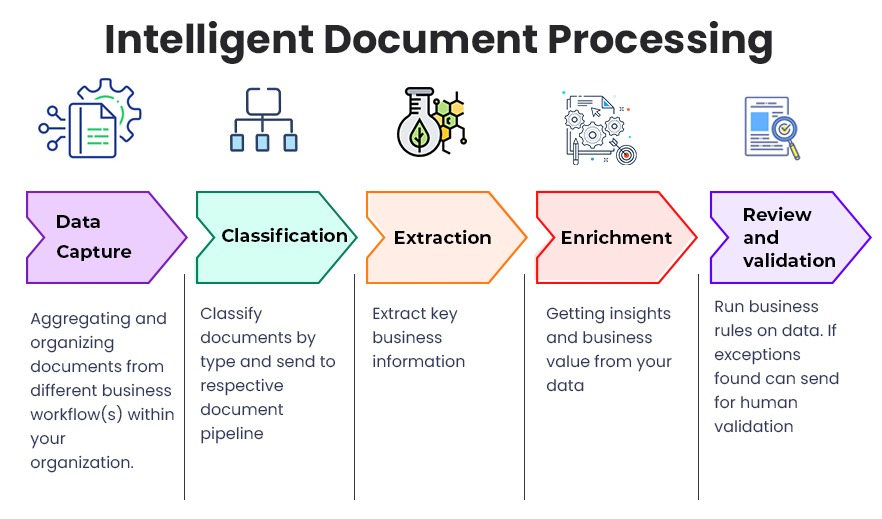

2. Intelligent Document Processing Pipeline

Multi-Stage Processing Architecture

| Processing Stage | Function | Technologies Used |
|---|---|---|
| Ingestion | Collect and queue documents | Apache Kafka, AWS Kinesis, RabbitMQ |
| Validation | Format and integrity checks | Custom validators, schema validation |
| Extraction | Content and metadata extraction | Tika, PDFPlumber, OCR engines |
| Normalization | Standardize format and structure | NLP pipelines, regex patterns |
| Chunking | Semantic segmentation | LangChain, custom chunkers |
| Embedding | Vector generation | Sentence Transformers, OpenAI API |
| Indexing | Vector storage | Pinecone, Weaviate, Qdrant |
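To make the Chunking stage more concrete, the sketch below shows a deliberately simple stand-in: fixed-size word windows with overlap. This is not semantic segmentation and is not the chunker of any library listed in the table; `chunk_words` and its default sizes are assumptions for illustration.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words that share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("chunk_size must be > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks
```

The overlap keeps sentences that straddle a boundary retrievable from at least one chunk, at the cost of embedding some words twice.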

3. Workflow Orchestration & Scheduling

Orchestration Platforms

- Apache Airflow for complex DAG management

- Prefect for modern workflow orchestration

- Kubernetes-native solutions (Argo Workflows)

- Custom orchestration for specialized requirements

Scheduling Strategies

- Time-based scheduling (hourly, daily, weekly)

- Event-driven triggering (file uploads, API calls)

- Dependency-based execution

- Manual override and ad-hoc processing capabilities
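Dependency-based execution can be sketched with the Python standard library alone (Python 3.9+). The example below is an assumption-laden illustration, not Airflow, Prefect, or Argo code; the stage names are taken from the processing table, and `execution_order` is a hypothetical helper.

```python
from graphlib import TopologicalSorter


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every stage runs after the stages it depends on.

    dependencies maps a stage name to the set of stages that must finish first.
    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    return list(TopologicalSorter(dependencies).static_order())


# Each stage of the pipeline depends on the one before it.
PIPELINE = {
    "validate": {"ingest"},
    "extract": {"validate"},
    "chunk": {"extract"},
    "embed": {"chunk"},
    "index": {"embed"},
}
```

Calling `execution_order(PIPELINE)` yields the stages from `ingest` through `index`; an orchestrator applies the same idea to larger graphs and adds retries, scheduling, and parallelism.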

Batch Processing Implementation

Large-Scale Document Processing

Bulk Processing Architecture

Source Systems → Change Detection → Job Queue → Parallel Processing → Quality Validation → Vector Store Update

Optimization Techniques

- Parallel Processing: Horizontal scaling across multiple workers

- Incremental Processing: Only process changed documents

- Priority Queuing: Critical documents processed first

- Resource Management: Dynamic allocation based on workload
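Priority queuing can be illustrated with a small in-memory heap. This is a sketch under stated assumptions: `PriorityJobQueue` is a hypothetical class, lower numbers mean higher priority, and a real deployment would use a durable queue rather than process memory.

```python
import heapq
import itertools


class PriorityJobQueue:
    """Jobs with a lower priority number are processed first; ties keep arrival order."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._counter = itertools.count()  # tie-breaker that preserves FIFO order

    def push(self, doc_id: str, priority: int) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), doc_id))

    def pop(self) -> str:
        """Remove and return the most urgent document ID."""
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

Combined with change detection, only documents that actually changed would be pushed, so critical updates are not stuck behind a full reprocessing run.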

Batch Processing Use Cases

Regular Knowledge Base Refreshes

- Weekly legal document updates

- Monthly research paper incorporation

- Quarterly policy manual revisions

- Annual report integration

Bulk Onboarding Scenarios

- Initial knowledge base population

- Legacy system migration

- Acquisition integration

- System recovery and rebuild

Streaming Processing Implementation

Real-Time Synchronization

Event-Driven Architecture

- Message brokers for event distribution (Kafka, Redis, SQS)

- Microservices for specialized processing tasks

- Stream processing frameworks (Apache Flink, Spark Streaming)

- Real-time monitoring and alerting
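The event-driven pattern can be sketched in a single process with `asyncio`, with an in-memory queue standing in for the message broker and a dictionary standing in for the vector database. All names here (`stream_processor`, `run_demo`, the ticket IDs) are hypothetical, and the lower-casing step is only a placeholder for embedding and upserting.

```python
import asyncio


async def stream_processor(events: asyncio.Queue, index: dict[str, str]) -> None:
    """Consume (doc_id, text) events until a None sentinel arrives."""
    while True:
        event = await events.get()
        try:
            if event is None:
                return  # shutdown signal
            doc_id, text = event
            # Placeholder for "embed and upsert": the latest event per document wins.
            index[doc_id] = text.strip().lower()
        finally:
            events.task_done()


async def run_demo() -> dict[str, str]:
    events: asyncio.Queue = asyncio.Queue()
    index: dict[str, str] = {}
    worker = asyncio.create_task(stream_processor(events, index))
    for event in [("ticket-1", "  Reset Password "),
                  ("ticket-1", "Reset password via SSO"),
                  None]:
        await events.put(event)
    await events.join()  # wait until every queued event has been handled
    await worker
    return index
```

Running `asyncio.run(run_demo())` leaves one entry for `ticket-1` holding the most recent text, which mirrors how a streaming update replaces the stale version of a document.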

Low-Latency Processing Pipeline

Event Source → Message Queue → Stream Processor → Embedding Service → Vector DB Update → Notification

Example: File Upload → Kafka Topic → Flink Job → FastAPI Service → Real-time Write → Webhook/Slack

Streaming Use Cases

Immediate Knowledge Updates

- Customer support ticket resolution incorporation

- Real-time market data integration

- Breaking news and alert integration

- Live meeting notes and decisions

Dynamic Content Sources

- Collaborative document editing (Google Docs, Confluence)

- Social media monitoring and integration

- IoT device data streams

- Transaction processing systems

Quality Assurance & Validation

Automated Quality Gates

Content Validation

- Format compliance checking

- Completeness validation

- Duplicate detection

- Relevance scoring
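A content quality gate covering completeness and duplicate detection might look like the sketch below. The required metadata keys, the minimum length, and the names `Document`, `fingerprint`, and `quality_gate` are assumptions made for this example.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical required fields; a real pipeline would define its own schema.
REQUIRED_METADATA = ("source", "updated_at")


@dataclass
class Document:
    doc_id: str
    text: str
    metadata: dict


def fingerprint(text: str) -> str:
    """Hash of case- and whitespace-normalized text, used for exact-duplicate checks."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def quality_gate(doc: Document, seen: set[str], min_chars: int = 20) -> list[str]:
    """Return a list of problems; an empty list means the document passes."""
    problems: list[str] = []
    if len(doc.text.strip()) < min_chars:
        problems.append("too_short")
    missing = [key for key in REQUIRED_METADATA if not doc.metadata.get(key)]
    if missing:
        problems.append("missing_metadata:" + ",".join(missing))
    fp = fingerprint(doc.text)
    if fp in seen:
        problems.append("duplicate")
    else:
        seen.add(fp)  # remember this content for later documents
    return problems
```

This catches only exact duplicates after normalization; near-duplicate detection would need a similarity measure such as shingling or embedding distance.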

Processing Validation

- Embedding quality assessment

- Chunk coherence verification

- Metadata accuracy checking

- Index integrity validation

Monitoring & Alerting

Real-Time Dashboards

- Processing pipeline health metrics

- Document throughput and latency

- Error rates and failure patterns

- Resource utilization and cost tracking

Alert Systems

- Processing failure notifications

- Quality threshold breaches

- Performance degradation alerts

- Capacity planning warnings

Scalability & Performance

Horizontal Scaling Architecture

Processing Node Scaling

- Containerized microservices (Docker, Kubernetes)

- Serverless functions for variable workloads

- Auto-scaling based on queue depth

- Geographic distribution for global enterprises
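Auto-scaling based on queue depth reduces to a small calculation. The sketch below assumes a known per-worker throughput and a target time to drain the queue; `desired_workers` and its bounds are hypothetical, and in practice an autoscaler (for example on Kubernetes) would apply the result.

```python
import math


def desired_workers(queue_depth: int,
                    docs_per_worker_per_min: float,
                    target_drain_min: float,
                    min_workers: int = 1,
                    max_workers: int = 50) -> int:
    """Workers needed to drain the queue within the target time, clamped to bounds."""
    if docs_per_worker_per_min <= 0 or target_drain_min <= 0:
        raise ValueError("throughput and drain target must be positive")
    needed = math.ceil(queue_depth / (docs_per_worker_per_min * target_drain_min))
    return max(min_workers, min(max_workers, needed))
```

For example, 1,200 queued documents at 20 documents per worker per minute and a 5-minute drain target call for 12 workers.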

Performance Optimization

- Caching Strategies: Frequently accessed documents and embeddings

- Batching Optimizations: Optimal batch sizes for embedding APIs

- Parallel Embedding: Concurrent processing of multiple documents

- Index Optimization: Efficient vector database write patterns
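Batching for embedding APIs can be sketched independently of any provider. Here `embed_batch` is an injected callable (an assumption of this example) that stands in for whichever embedding client is used, and the default batch size of 64 is illustrative rather than a recommendation.

```python
from typing import Callable, Iterable, Iterator


def batched(items: Iterable[str], batch_size: int) -> Iterator[list[str]]:
    """Yield consecutive lists of at most `batch_size` items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    batch: list[str] = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly smaller batch


def embed_all(chunks: Iterable[str],
              embed_batch: Callable[[list[str]], list[list[float]]],
              batch_size: int = 64) -> list[list[float]]:
    """Embed chunks batch by batch, preserving input order."""
    vectors: list[list[float]] = []
    for batch in batched(chunks, batch_size):
        vectors.extend(embed_batch(batch))
    return vectors
```

Fewer, larger requests usually reduce per-call overhead, while the upper limit is set by the provider's request size and rate limits.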

Capacity Planning

Throughput Benchmarks

- Small-scale: 1,000-10,000 documents/hour

- Medium-scale: 10,000-100,000 documents/hour

- Enterprise-scale: 100,000+ documents/hour

- Peak handling: 5x normal capacity for surge periods

Latency Targets

- Batch processing: <1 hour for 10,000 documents

- Streaming processing: <30 seconds end-to-end

- Priority processing: <5 minutes for critical updates

- Query consistency: Updates are queryable immediately after processing completes
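The throughput and latency figures above translate into sizing arithmetic along these lines. The per-document processing time is an assumed input that would have to be measured for a given workload; `docs_per_second` and `workers_for` are hypothetical helpers.

```python
import math


def docs_per_second(docs_per_hour: float) -> float:
    """Convert an hourly throughput target into documents per second."""
    return docs_per_hour / 3600


def workers_for(docs_per_hour: float,
                seconds_per_doc: float,
                surge_factor: float = 1.0) -> int:
    """Sequential workers needed to sustain the target rate, including surge headroom."""
    return math.ceil(docs_per_hour * surge_factor * seconds_per_doc / 3600)
```

Processing 10,000 documents within one hour requires roughly 2.78 documents per second. At an assumed 0.5 seconds per document, 100,000 documents per hour needs 14 workers, and 70 workers at the 5x surge level.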

Integration Capabilities

Source System Connectors

Pre-Built Connectors

- Cloud Storage: AWS S3, Azure Blob, Google Cloud Storage

- Collaboration Tools: SharePoint, Confluence, Google Workspace

- Databases: SQL Server, PostgreSQL, MongoDB, Elasticsearch

- CMS Systems: WordPress, Drupal, Contentful

Custom Connector Development

- Proprietary system integration

- Legacy system adapters

- Industry-specific formats

- Custom API integrations

Output & Synchronization

Multi-Destination Support

- Vector database synchronization (primary and replicas)

- Search index updates (Elasticsearch, Solr)

- Cache invalidation and refresh

- External system notifications

Consistency Management

- Transactional updates across systems

- Version control and rollback capabilities

- Conflict resolution for concurrent updates

- Audit trail and change tracking

Security & Compliance

Data Protection

Processing Security

- Secure credential management for source systems

- Encrypted data in transit and at rest

- Isolated processing environments

- Secure deletion of temporary files

Access Control

- Role-based access to processing pipelines

- Source system authentication and authorization

- Processing job authorization and approval

- Audit logging of all pipeline activities

Compliance Features

Regulatory Compliance

- GDPR/CCPA data processing compliance

- HIPAA compliance for healthcare data

- Financial regulations (SOX, FINRA)

- Industry-specific certification support

Audit & Reporting

- Complete processing audit trails

- Compliance reporting automation

- Data lineage and provenance tracking

- Retention policy enforcement

Advanced Features

Intelligent Processing Optimization

Adaptive Processing Rules

- Content type-specific processing pipelines

- Quality-based reprocessing decisions

- Cost optimization for API usage

- Performance tuning based on patterns

Machine Learning Enhancements

- Document classification for optimal processing

- Quality prediction for automated validation

- Failure prediction and prevention

- Resource requirement forecasting

Disaster Recovery & Reliability

High Availability Design

- Redundant processing pipelines

- Geographic failover capabilities

- Data replication and backup

- Automated recovery procedures

Data Consistency Guarantees

- Exactly-once processing semantics

- Order preservation for time-sensitive data

- Conflict detection and resolution

- Recovery point objectives (RPO) and recovery time objectives (RTO)
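One common way to approximate exactly-once effects is to accept at-least-once delivery and make the write idempotent. The sketch below illustrates that general technique under stated assumptions (unique event IDs, monotonically increasing document versions); `IdempotentIndexer` is a hypothetical class and keeps its state in memory for brevity.

```python
class IdempotentIndexer:
    """Applies each event at most once and never overwrites a newer version."""

    def __init__(self) -> None:
        self.index: dict[str, tuple[int, str]] = {}  # doc_id -> (version, text)
        self._applied: set[str] = set()              # event IDs already handled

    def apply(self, event_id: str, doc_id: str, version: int, text: str) -> bool:
        """Return True if the event changed the index, False if it was skipped."""
        if event_id in self._applied:
            return False  # redelivered event: already handled
        self._applied.add(event_id)
        current = self.index.get(doc_id)
        if current is not None and current[0] >= version:
            return False  # out-of-order event carrying an older version
        self.index[doc_id] = (version, text)
        return True
```

The version check also covers order preservation: a late-arriving older event cannot replace newer content.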

Management & Operations

Administration Interface

Control Panel Features

- Pipeline configuration and management

- Processing job monitoring and control

- Performance analytics and reporting

- Alert configuration and management

Operational Tools

- Manual override and reprocessing capabilities

- Bulk operations and maintenance tasks

- System health checks and diagnostics

- Capacity planning and resource management

Maintenance & Support

Routine Maintenance

- Regular system updates and patches

- Performance optimization and tuning

- Capacity scaling and rebalancing

- Security updates and compliance checks

Support Services

- 24/7 monitoring and incident response

- Performance consulting and optimization

- Custom development for new requirements

- Training and knowledge transfer

Implementation Roadmap

Phase 1: Foundation (4-6 Weeks)

- Source system analysis and connector development

- Basic batch processing pipeline implementation

- Core document processing capabilities

- Initial monitoring and alerting setup

Phase 2: Enhancement (6-8 Weeks)

- Addition of streaming processing capabilities

- Advanced quality assurance implementation

- Performance optimization and scaling

- Integration with existing enterprise systems

Phase 3: Optimization (Ongoing)

- Machine learning enhancements

- Advanced automation and intelligence

- Continuous performance improvement

- Feature expansion and customization

Business Benefits

Operational Efficiency

- 70-80% reduction in manual document processing

- Real-time knowledge availability

- Consistent processing quality

- Reduced operational risk

Cost Optimization

- Reduced manual labor costs

- Optimal cloud resource utilization

- Minimized reprocessing and errors

- Scalable architecture with predictable costs

Strategic Advantage

- Faster time-to-market for new information

- Improved AI application accuracy

- Enhanced compliance and auditability

- Scalable foundation for future AI initiatives

Our Document Processing & Content Pipeline Automation solutions ensure your RAG systems operate on accurate, current information through sophisticated automation that handles the complexity of enterprise knowledge management at scale.