Systematic Performance Measurement for Enterprise RAG Systems

We implement comprehensive evaluation frameworks that continuously assess RAG system performance across multiple quality dimensions. Our LLM-as-a-Judge systems and automated evaluation pipelines provide quantitative metrics for accuracy, relevance, coherence, and retrieval correctness, enabling data-driven optimization of your AI applications.

Evaluation Framework Architecture

Multi-Dimensional Assessment System

Evaluation Layer Architecture

RAG System Output → Multi-Metric Evaluation → Score Aggregation → Performance Dashboard → Optimization Feedback

- RAG System Output: User query and response

- Multi-Metric Evaluation: Accuracy, relevance, coherence, hallucination, retrieval quality

- Score Aggregation: Weighted scoring

- Performance Dashboard: Real-time metrics and trend analysis

- Optimization Feedback: Automated tuning recommendations

Core Evaluation Components

| Evaluation Component | Primary Metrics | Assessment Method |
|---|---|---|
| Retrieval Quality | Recall@K, Precision@K, MRR, nDCG | Automated scoring against ground truth |
| Response Accuracy | Factual correctness, Hallucination rate, Citation accuracy | LLM-as-Judge, Human-in-the-loop |
| Relevance & Coherence | Query-response alignment, Logical flow, Context adherence | LLM evaluation, Semantic similarity |
| Practical Utility | User satisfaction, Task completion rate, Helpfulness | User feedback, Task-based evaluation |
| Safety & Compliance | Policy adherence, Toxicity, Bias detection | Rule-based + ML classifiers |

Automated Evaluation Pipelines

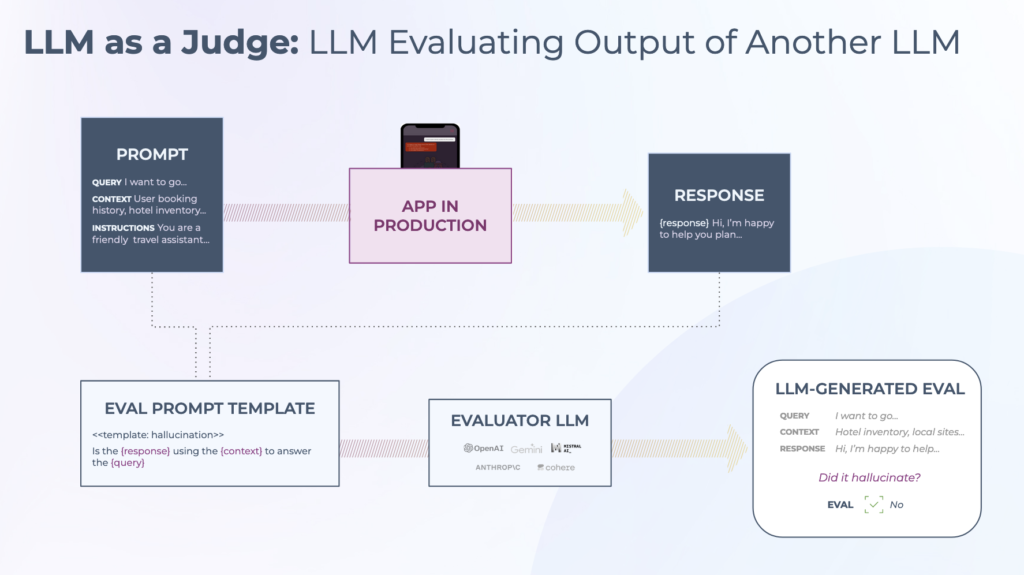

1. LLM-as-a-Judge Framework

Judge LLM Configuration

- Specialized evaluation models fine-tuned for assessment tasks

- Multi-judge ensembles for reduced bias and increased reliability

- Cost-optimized judge selection (smaller models for simple tasks)

- Custom rubric development for domain-specific evaluation

Evaluation Process

Response → Judge LLM Prompt → Rubric Application → Score Generation → Confidence Assessment

- Response: RAG output

- Judge LLM Prompt: Structured prompt with clear rubrics

- Rubric Application: Evaluation criteria (5-10 dimensions)

- Score Generation: Numerical score (0-10 scale)

- Confidence Assessment: Reliability metric (High/Medium/Low)

Common Evaluation Rubrics

- Factual Accuracy: 1-5 scale (Completely wrong → Perfectly accurate)

- Relevance: 1-5 scale (Completely off-topic → Perfectly relevant)

- Coherence: 1-5 scale (Incomprehensible → Perfectly logical)

- Completeness: 1-5 scale (Misses key points → Covers all aspects)

- Conciseness: 1-5 scale (Extremely verbose → Perfectly concise)
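
The rubric dimensions above could be turned into a judge prompt and combined across several judges roughly as follows. This is a minimal sketch: the function names, the JSON reply format, and the spread-based confidence thresholds are illustrative assumptions, and the actual call to a judge model is deliberately left out.

```python
import json
import statistics

# Rubric dimensions from the list above; each is scored on a 1-5 scale.
RUBRIC = {
    "factual_accuracy": "1 = completely wrong, 5 = perfectly accurate",
    "relevance": "1 = completely off-topic, 5 = perfectly relevant",
    "coherence": "1 = incomprehensible, 5 = perfectly logical",
    "completeness": "1 = misses key points, 5 = covers all aspects",
    "conciseness": "1 = extremely verbose, 5 = perfectly concise",
}


def build_judge_prompt(query: str, context: str, response: str) -> str:
    """Assemble a structured judge prompt that embeds the rubric."""
    rubric_lines = "\n".join(f"- {name}: {desc}" for name, desc in RUBRIC.items())
    return (
        "You are grading a RAG system response.\n"
        f"Score each dimension from 1 to 5:\n{rubric_lines}\n\n"
        f"Query:\n{query}\n\nRetrieved context:\n{context}\n\n"
        f"Response:\n{response}\n\n"
        "Reply with a JSON object mapping each dimension name to an integer."
    )


def parse_judge_reply(reply: str) -> dict:
    """Validate a judge reply; raise ValueError on malformed or out-of-range scores."""
    scores = json.loads(reply)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(scores, dict):
        raise ValueError("judge reply must be a JSON object")
    for name in RUBRIC:
        value = scores.get(name)
        is_int = isinstance(value, int) and not isinstance(value, bool)
        if not is_int or not 1 <= value <= 5:
            raise ValueError(f"invalid score for {name!r}: {value!r}")
    return {name: scores[name] for name in RUBRIC}


def aggregate_judges(replies: list) -> dict:
    """Combine several judges: median score plus a spread-based confidence label."""
    result = {}
    for name in RUBRIC:
        values = [reply[name] for reply in replies]
        spread = max(values) - min(values)
        # Assumed thresholds: full agreement is High, a one-point spread is Medium.
        confidence = "High" if spread == 0 else "Medium" if spread == 1 else "Low"
        result[name] = {"score": statistics.median(values), "confidence": confidence}
    return result
```

The median is used here because it is less sensitive to a single outlier judge than the mean; a weighted mean would be an equally reasonable choice if some judges are trusted more than others.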

2. Retrieval Quality Assessment

Automated Retrieval Metrics

- Recall@K: Proportion of all relevant documents that appear in the top K results

- Precision@K: Proportion of the top K retrieved documents that are relevant

- Mean Reciprocal Rank (MRR): Average, across queries, of the reciprocal rank of the first relevant document

- Normalized Discounted Cumulative Gain (nDCG): Ranking quality considering graded relevance

- Hit Rate: Percentage of queries where at least one relevant document is retrieved
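
The retrieval metrics above can be computed from ranked document IDs and ground-truth relevance labels. The sketch below uses only the standard library and assumes each retrieved list contains unique document IDs; the function names and the `gains` mapping (document ID to graded relevance) are illustrative choices.

```python
import math


def recall_at_k(retrieved: list, relevant: set, k: int) -> float:
    """Share of all relevant documents that appear in the top k."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def precision_at_k(retrieved: list, relevant: set, k: int) -> float:
    """Share of the top k slots filled by relevant documents (divides by k)."""
    if k <= 0:
        return 0.0
    return sum(1 for doc in retrieved[:k] if doc in relevant) / k


def reciprocal_rank(retrieved: list, relevant: set) -> float:
    """1 / rank of the first relevant document, or 0 if none is retrieved."""
    for rank, doc in enumerate(retrieved, start=1):
        if doc in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(results: list) -> float:
    """Average reciprocal rank over (retrieved, relevant) pairs, one per query."""
    if not results:
        return 0.0
    return sum(reciprocal_rank(r, rel) for r, rel in results) / len(results)


def ndcg_at_k(retrieved: list, gains: dict, k: int) -> float:
    """nDCG with graded relevance; gains maps doc ID to a relevance grade."""
    dcg = sum(
        gains.get(doc, 0.0) / math.log2(i + 2) for i, doc in enumerate(retrieved[:k])
    )
    ideal = sorted(gains.values(), reverse=True)[:k]
    idcg = sum(g / math.log2(i + 2) for i, g in enumerate(ideal))
    return dcg / idcg if idcg > 0 else 0.0


def hit_rate(results: list, k: int) -> float:
    """Share of queries with at least one relevant document in the top k."""
    if not results:
        return 0.0
    hits = sum(1 for retrieved, relevant in results if set(retrieved[:k]) & relevant)
    return hits / len(results)
```

For example, with `retrieved = ["d3", "d1", "d7", "d2"]` and `relevant = {"d1", "d2", "d5"}`, Recall@3 and Precision@3 are both 1/3, and the reciprocal rank is 0.5 because the first relevant document sits at rank 2.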

Evaluation Implementation

Query → Retrieval System → Retrieved Documents → Relevance Judgment → Metric Calculation

- Query: User input

- Retrieval System: Vector search

- Retrieved Documents: Top K results (10-20 docs)

- Relevance Judgment: Automated/manual relevance scoring

- Metric Calculation: Performance scores (daily/weekly trends)

3. End-to-End System Evaluation

A/B Testing Framework

- Concurrent deployment of multiple RAG configurations

- Random user assignment to different system versions

- Performance comparison across user segments

- Statistical significance testing for improvements
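
As one possible illustration of user assignment and significance testing, the sketch below buckets users by hashing their ID and compares task success rates with a two-proportion z-test. Hash-based bucketing is a common stand-in for random assignment that keeps a user in the same variant across sessions; the salt value, the variant names, and the choice of a z-test are assumptions for this example.

```python
import hashlib
import math


def assign_variant(user_id: str, variants=("A", "B"), salt: str = "rag-exp-1") -> str:
    """Deterministically map a user to a variant so repeat visits stay consistent."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # identical all-success or all-failure groups
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

With 400 successes out of 1,000 queries for configuration A and 460 out of 1,000 for B, the test gives z of about 2.71 and a p-value below 0.01. The z-test assumes reasonably large samples; for small samples or per-segment comparisons an exact test may be more appropriate.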

Canary Testing

- Gradual rollout of new system versions

- Performance monitoring during rollout

- Automatic rollback on quality degradation

- Impact measurement on key business metrics
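
A gradual rollout with automatic rollback might be structured like the sketch below. The `measure_quality` callback, the traffic stages, and the `max_drop` tolerance are hypothetical; in practice the callback would query whatever monitoring system tracks the canary's quality score.

```python
def run_canary(stages, measure_quality, baseline: float, max_drop: float = 0.05) -> dict:
    """Step through traffic shares; roll back as soon as quality degrades.

    stages: increasing traffic shares, for example [0.01, 0.1, 0.5, 1.0].
    measure_quality: hypothetical callback returning a quality score for a share.
    baseline: quality score of the currently deployed version.
    """
    if not stages:
        raise ValueError("at least one rollout stage is required")
    quality = baseline
    for share in stages:
        quality = measure_quality(share)
        if quality < baseline - max_drop:
            return {"status": "rolled_back", "at_share": share, "quality": quality}
    return {"status": "promoted", "at_share": stages[-1], "quality": quality}
```

The loop stops at the first stage that breaches the tolerance, so a regression visible at 1% of traffic never reaches the larger stages.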

Evaluation Metrics & KPIs

Accuracy & Correctness Metrics

Factual Accuracy Scoring

- Statement-Level Accuracy: Percentage of factual claims that are correct

- Hallucination Rate: Proportion of responses containing unsupported claims

- Citation Precision: Percentage of citations that support the corresponding claim

- Error Severity Classification: Categorization of errors by business impact
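
Once individual claims have been labeled (by a judge model or a human reviewer), the first three metrics above reduce to simple ratios. The `Claim` structure and its field names below are assumptions for illustration; how claims are extracted and labeled is outside this sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Claim:
    correct: bool                       # claim is factually correct
    supported: bool                     # claim is grounded in the retrieved context
    citation_ok: Optional[bool] = None  # None when the claim carries no citation


def statement_accuracy(responses: list) -> float:
    """Share of all factual claims, across responses, that are correct."""
    claims = [c for response in responses for c in response]
    return sum(c.correct for c in claims) / len(claims) if claims else 0.0


def hallucination_rate(responses: list) -> float:
    """Share of responses containing at least one unsupported claim."""
    if not responses:
        return 0.0
    flagged = sum(1 for response in responses if any(not c.supported for c in response))
    return flagged / len(responses)


def citation_precision(responses: list) -> float:
    """Share of citations that actually support their claim."""
    cited = [c for response in responses for c in response if c.citation_ok is not None]
    return sum(c.citation_ok for c in cited) / len(cited) if cited else 0.0
```

Note the different denominators: statement-level accuracy and citation precision are computed per claim, while hallucination rate is computed per response, matching the definitions above.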

Calculation Methods

- Automated fact-checking against knowledge base

- LLM verification with source grounding

- Human evaluation for ambiguous cases

- Confidence scoring with uncertainty estimation

Relevance & Quality Metrics

Response Relevance Assessment

- Query-Response Alignment: Semantic similarity between query and response

- Context Utilization: Percentage of retrieved context effectively used

- Staying on Topic: Measurement of tangential or off-topic content

- User Intent Fulfillment: Degree to which user’s underlying need is addressed
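
Query-response alignment is often approximated by the cosine similarity between embedding vectors of the query and the response. The sketch below shows only the similarity computation; the vectors are assumed to come from an embedding model of your choice, which is not specified here.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two equal-length vectors, in [-1, 1]."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as having no similarity
    return dot / (norm_a * norm_b)
```

A high similarity indicates topical overlap, not correctness, so this score is best read alongside the accuracy metrics rather than on its own.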

Coherence & Readability

- Logical Flow Score: Assessment of response structure and progression

- Contradiction Detection: Identification of internal inconsistencies

- Grammar & Style: Professional quality of language and formatting

- Tone Appropriateness: Match between response tone and context

Business Impact Metrics

User Experience Indicators

- User Satisfaction Score (USS): Direct user ratings (1-5 scale)

- Task Success Rate: Percentage of queries where user achieves goal

- Engagement Metrics: Session length, follow-up questions, return rate

- Escalation Rate: Percentage of interactions requiring human intervention

Operational Efficiency

- Response Time: End-to-end latency percentiles (p50, p95, p99)

- Cost per Query: Infrastructure and API cost analysis

- Throughput Capacity: Maximum queries per second sustained

- Resource Utilization: Compute and memory efficiency metrics
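
Latency percentiles and cost per query can be derived from per-request logs with the standard library. This is a sketch under the assumption that latencies are recorded in milliseconds and that total cost already includes infrastructure and API spend; the function names are illustrative.

```python
import statistics


def latency_percentiles(latencies_ms: list) -> dict:
    """Return p50, p95, and p99 from a list of end-to-end latencies."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two samples to estimate percentiles")
    # n=100 yields 99 cut points; index i-1 holds the i-th percentile.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


def cost_per_query(total_cost: float, query_count: int) -> float:
    """Average cost per query over a reporting window."""
    if query_count <= 0:
        raise ValueError("query_count must be positive")
    return total_cost / query_count
```

Tail percentiles such as p99 are only meaningful with enough samples; with a few hundred requests the p99 estimate rests on a handful of observations.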

Continuous Evaluation Infrastructure

Automated Testing Pipeline

Daily Evaluation Runs

- Scheduled evaluation of sample queries (100-1000 daily)

- Performance trending and anomaly detection

- Automated alerting on quality degradation

- Regression test suite for critical use cases

Evaluation Dataset Management

- Golden Dataset: Curated high-quality examples for benchmark testing

- Edge Case Repository: Challenging queries that test system limits

- User Feedback Corpus: Real user queries and satisfaction ratings

- Ablation Test Sets: Controlled variations to isolate component performance

Real-Time Monitoring

Live Performance Dashboard

- Real-time metrics for active conversations

- Quality score streaming with alert thresholds

- Immediate incorporation of user feedback

- System health and performance monitoring

Anomaly Detection Systems

- Statistical process control for quality metrics

- Pattern recognition for emerging issues

- Predictive alerts before critical failures

- Root cause analysis automation
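
Statistical process control for a quality metric can be as simple as a Shewhart-style control chart: derive limits from a stable baseline period and flag new observations that fall outside them. The three-sigma default and the function names below are assumptions for this sketch.

```python
import statistics


def control_limits(baseline: list, sigmas: float = 3.0) -> tuple:
    """Lower and upper control limits from a stable baseline period."""
    mean = statistics.fmean(baseline)
    sd = statistics.stdev(baseline)  # sample standard deviation, needs >= 2 points
    return mean - sigmas * sd, mean + sigmas * sd


def out_of_control(baseline: list, recent: list, sigmas: float = 3.0) -> list:
    """Return (index, value) pairs in recent that breach the control limits."""
    lower, upper = control_limits(baseline, sigmas)
    return [(i, x) for i, x in enumerate(recent) if x < lower or x > upper]
```

For example, with daily accuracy scores hovering around 0.90 in the baseline, a sudden reading of 0.80 would fall below the lower limit and be flagged, while normal day-to-day variation would not.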

Advanced Evaluation Techniques

Multi-Judge Ensemble Methods

Diverse Judge Panels

- Different LLM models for varied perspectives

- Specialized judges for different evaluation dimensions

- Human-AI hybrid evaluation workflows

- Cross-validation between judge types

Bias Mitigation Strategies

- Debiasing prompts and rubrics

- Multiple perspective evaluation

- Calibration against human judgments

- Continuous judge performance assessment
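
Calibration against human judgments needs an agreement statistic. One common option is Cohen's kappa, which corrects raw agreement for the agreement expected by chance; the sketch below computes it for paired judge and human labels. Choosing kappa (rather than, say, a rank correlation) is an assumption made for this example.

```python
from collections import Counter


def raw_agreement(judge: list, human: list) -> float:
    """Share of items where judge and human assign the same label."""
    if len(judge) != len(human) or not judge:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(j == h for j, h in zip(judge, human)) / len(judge)


def cohens_kappa(judge: list, human: list) -> float:
    """Chance-corrected agreement between two raters over the same items."""
    observed = raw_agreement(judge, human)
    n = len(judge)
    judge_counts = Counter(judge)
    human_counts = Counter(human)
    # Expected agreement if both raters labeled independently at their own rates.
    expected = sum(
        judge_counts[label] * human_counts[label] for label in judge_counts
    ) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)
```

Plain kappa treats a 4-versus-5 disagreement the same as a 1-versus-5 disagreement; for ordinal rubric scores a weighted kappa or a rank correlation may describe judge quality better.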

Fine-Grained Evaluation

Component-Level Assessment

- Retriever-Only Evaluation: Isolated retrieval performance

- Generator-Only Evaluation: LLM performance with perfect context

- End-to-End Evaluation: Complete system performance

- Ablation Studies: Impact of individual components

Granular Error Analysis

- Error categorization and frequency analysis

- Root cause identification for common failure modes

- Component responsibility attribution

- Targeted improvement recommendations
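
Component responsibility attribution can start from a simple rule: if no relevant document was retrieved, the failure is charged to the retriever; if relevant context was available but the answer is still wrong, it is charged to the generator. The record structure and labels below are illustrative assumptions, and real failures may involve both components.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class EvalRecord:
    query_id: str
    retrieval_hit: bool   # at least one relevant document was retrieved
    answer_correct: bool  # final response judged correct


def attribute(record: EvalRecord) -> str:
    """Assign a failing query to the component most likely responsible."""
    if record.answer_correct:
        return "ok"
    return "generator" if record.retrieval_hit else "retriever"


def failure_breakdown(records: list) -> Counter:
    """Frequency of each attribution label across an evaluation run."""
    return Counter(attribute(r) for r in records)
```

The resulting counts give a first indication of where improvement effort is likely to pay off, before any deeper root cause analysis.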

Integration with Development Lifecycle

CI/CD Pipeline Integration

Automated Quality Gates

- Minimum performance thresholds for deployment

- Regression test requirements

- Performance benchmarking against baselines

- Security and compliance validation
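
A quality gate in a CI/CD pipeline can be expressed as a set of thresholds checked against the latest evaluation run. The metric names and threshold values below are hypothetical placeholders, not recommended targets.

```python
# Hypothetical gates: ("min", x) means the metric must be >= x,
# ("max", x) means the metric must be <= x.
GATES = {
    "recall_at_10": ("min", 0.80),
    "factual_accuracy": ("min", 0.90),
    "hallucination_rate": ("max", 0.05),
    "p95_latency_ms": ("max", 3000),
}


def check_gates(metrics: dict, gates: dict = GATES) -> list:
    """Return a list of human-readable gate failures; empty means deployable."""
    failures = []
    for name, (kind, limit) in gates.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: metric missing from evaluation run")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} is below the minimum {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} exceeds the maximum {limit}")
    return failures
```

In a pipeline step, a non-empty result would typically be printed and followed by a non-zero exit code (for example via `sys.exit(1)`) so that the deployment stage is blocked.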

Development Feedback Loops

- Performance impact assessment for code changes

- A/B test results integration

- User feedback to development teams

- Priority scoring for improvement areas

Performance Optimization Workflow

Data-Driven Improvement Cycle

1. Performance Measurement: Identify weak areas with metrics

2. Root Cause Analysis: Diagnose specific component failures

3. Targeted Improvements: Implement fixes and optimizations

4. Validation Testing: Verify improvements in controlled tests

5. Deployment: Roll out to users

6. Continuous Monitoring: Track impact and return to Step 1

Specialized Industry Evaluation Frameworks

Healthcare & Medical Applications

Clinical Accuracy Evaluation

- Medical fact verification against authoritative sources

- Treatment guideline compliance assessment

- Risk factor identification accuracy

- Diagnostic suggestion appropriateness

Safety & Compliance Metrics

- Harm prevention effectiveness

- Regulatory requirement adherence

- Patient privacy protection

- Ethical guideline compliance

Financial Services Applications

Financial Accuracy Assessment

- Numerical accuracy in calculations

- Regulatory disclosure completeness

- Risk assessment quality

- Market data correctness

Compliance & Risk Metrics

- Regulatory rule violation detection

- Risk disclosure appropriateness

- Financial advice quality standards

- Client suitability assessment

Legal & Compliance Applications

Legal Accuracy Evaluation

- Case law citation correctness

- Statute interpretation accuracy

- Procedural rule compliance

- Legal reasoning quality

Comprehensive Coverage Metrics

- Issue spotting completeness

- Precedent identification relevance

- Jurisdictional appropriateness

- Client-specific adaptation

Reporting & Analytics

Executive Dashboard

High-Level Performance Overview

- Overall system health score

- Trend analysis across key metrics

- Business impact correlation

- ROI calculation and forecasting

Department-Specific Views

- Custom metrics per business unit

- Use case performance breakdown

- Cost-benefit analysis by department

- Adoption and utilization statistics

Detailed Analytics Suite

Deep Dive Analysis Tools

- Query-level performance investigation

- Component failure analysis

- User behavior pattern recognition

- Cost optimization recommendations

Predictive Analytics

- Performance forecasting

- Capacity planning predictions

- User satisfaction modeling

- System scaling requirements

Implementation Roadmap

Phase 1: Foundation (3-4 Weeks)

Basic Evaluation Setup

- Core metrics definition and baseline establishment

- Automated evaluation pipeline for critical use cases

- Basic dashboard and reporting

- Alert system for critical failures

Initial Integration

- CI/CD pipeline quality gates

- Daily performance reporting

- Basic A/B testing capability

- User feedback collection system

Phase 2: Enhancement (4-6 Weeks)

Advanced Evaluation Systems

- LLM-as-a-Judge implementation

- Multi-dimensional scoring

- Advanced retrieval metrics

- Comprehensive error analysis

Optimization Integration

- Data-driven improvement workflows

- Component-level performance tracking

- Advanced anomaly detection

- Predictive performance modeling

Phase 3: Maturity (Ongoing)

Continuous Improvement

- Automated optimization recommendations

- Self-improving evaluation systems

- Cross-system benchmarking

- Industry-specific evaluation frameworks

Enterprise Integration

- Full SDLC integration

- Compliance certification support

- Executive reporting automation

- Strategic planning integration

Success Metrics

Evaluation System Performance

Accuracy & Reliability

- Judge-human agreement: >0.8 correlation between judge scores and human ratings

- False positive rate: <5% for critical issues

- Coverage: >90% of production queries evaluated

- Latency: <30 seconds for evaluation results

Business Impact

- Quality improvement velocity: 2-3x faster iterations

- Incident reduction: >50% decrease in critical errors

- User satisfaction improvement: >20% increase in satisfaction scores

- Cost optimization: >30% reduction in manual review workload

System Scalability

- Evaluation throughput: 10,000+ queries per hour

- Concurrent evaluations: 100+ simultaneous assessments

- Data storage: 6+ months of detailed evaluation data

- Integration: Seamless with all major RAG platforms

Support & Evolution

Continuous Framework Improvement

Regular Updates

- New metric development based on emerging needs

- Evaluation technique enhancement

- Integration with new LLM capabilities

- Performance optimization

Community & Standards

- Participation in evaluation standards development

- Industry benchmarking participation

- Best practice sharing and adoption

- Research collaboration opportunities

Our LLM Judge & Evaluation Frameworks provide the critical measurement and optimization capabilities needed to ensure your RAG systems deliver consistent, high-quality performance while continuously improving through data-driven insights and automated feedback loops.