Retrieval Augmented Generation (RAG) Consulting Support Services

Building a Retrieval-Augmented Generation (RAG) Solution

Retrieval-Augmented Generation (RAG) is a powerful technique that combines the strengths of large language models (LLMs) and information retrieval to generate more accurate, relevant, and informative responses. By leveraging a knowledge base, RAG systems can access and process relevant information, ensuring that the generated content is grounded in factual data.

– Gather diverse data sources (text, images, audio, video)

– Preprocess data (cleaning, deduplication, PII handling)

Data Cleaning: Clean and preprocess the data to remove noise, inconsistencies, and irrelevant information.
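The cleaning, deduplication, and PII-handling steps above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a production pipeline: the function names `clean` and `deduplicate`, the `[EMAIL]` placeholder, and the choice to mask only email addresses are all hypothetical, and real PII handling usually covers many more entity types.

```python
import hashlib
import re

# Assumption: email addresses are the only PII masked in this sketch.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def clean(text: str) -> str:
    """Mask email addresses and collapse runs of whitespace."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(docs: list[str]) -> list[str]:
    """Return cleaned documents, dropping empties and case-insensitive exact duplicates."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in docs:
        cleaned = clean(doc)
        # Hash the lowercased text so duplicates differing only in case are caught.
        digest = hashlib.sha256(cleaned.lower().encode("utf-8")).hexdigest()
        if cleaned and digest not in seen:
            seen.add(digest)
            unique.append(cleaned)
    return unique
```

Exact-match hashing only catches identical documents after normalization; near-duplicate detection would need a different technique.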

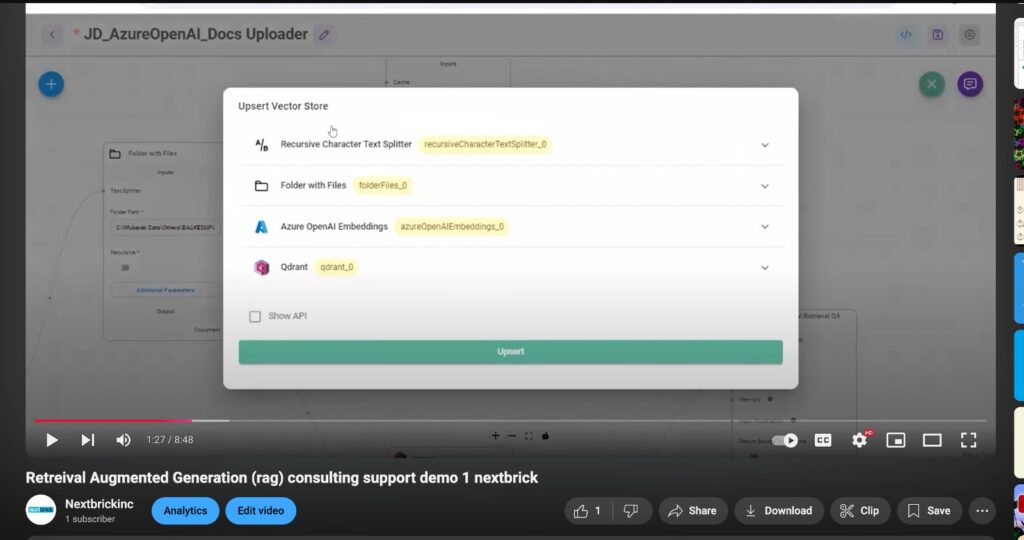

Data Chunking: Break down large documents into smaller, manageable chunks. This can be done based on semantic meaning, paragraph boundaries, or fixed-size chunks.
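Two of the chunking approaches mentioned above, paragraph boundaries and fixed-size chunks, might look like the sketch below. The function names and the default sizes are illustrative assumptions; sizes are counted in characters here for simplicity, whereas real systems often count tokens.

```python
def chunk_by_paragraph(text: str, max_chars: int = 500) -> list[str]:
    """Group consecutive paragraphs into chunks of at most max_chars characters."""
    chunks: list[str] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = para  # an oversized paragraph becomes its own chunk
    if current:
        chunks.append(current)
    return chunks


def chunk_fixed(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap`."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    if not text:
        return []
    step = size - overlap
    # Stop once the remaining tail is already covered by the previous window.
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + size] for i in range(0, last_start, step)]
```

The overlap keeps a sentence that straddles a boundary retrievable from at least one chunk, at the cost of some duplicated storage.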

– Implement multimodal chunking strategies

– Select or fine-tune embedding models for different modalities

– Generate embeddings for all data types

– Experiment with domain-specific embedding models

– Choose a scalable vector database (e.g., Pinecone, Weaviate, Qdrant, MongoDB, Elasticsearch)

– Index embeddings with metadata

– Implement hybrid search capabilities (dense and sparse retrieval)

– Develop query understanding and intent classification

– Implement query expansion and reformulation techniques

– Create multimodal query handling (text, image, voice inputs)

– Implement dense retrieval with customizable parameters

– Develop re-ranking algorithms for improved relevance

– Create ensemble retrieval methods combining multiple strategies

Search Mechanism: Implement hybrid search methods (dense + sparse retrieval) for optimal results
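One common way to combine dense and sparse results is reciprocal rank fusion (RRF), sketched below. It is named here as one possible fusion technique, not necessarily the one used in any given deployment. The function name is hypothetical, and `k = 60` is a conventional default rather than a tuned value. Each input list is assumed to be document IDs ordered from best to worst.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs into a single ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


dense_hits = ["d1", "d2", "d3"]   # e.g. from vector similarity
sparse_hits = ["d3", "d1", "d4"]  # e.g. from keyword (BM25-style) search
fused = reciprocal_rank_fusion([dense_hits, sparse_hits])
```

RRF uses only rank positions, so it avoids having to normalize dense and sparse scores onto a common scale.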

– Design dynamic prompt engineering techniques

– Implement iterative retrieval for complex queries

– Develop context fusion methods for multimodal data

– Select and integrate appropriate LLMs (OpenAI GPT-4, Anthropic Claude, Google PaLM, Mistral AI, open-source models such as LLaMA and Falcon) for various use cases

– Implement model switching based on query complexity

– Develop fine-tuning pipelines for domain-specific tasks

– Implement multi-step reasoning for complex queries

– Develop fact-checking and hallucination detection mechanisms

– Create response formatting for different output modalities

– Implement comprehensive evaluation metrics (relevance, coherence, factuality)

– Develop feedback loops for continuous improvement

– Optimize system performance and latency

– Design a modular, microservices-based architecture

– Implement caching and load balancing strategies

– Develop monitoring and logging systems for production environments

– Create intuitive interfaces for various use cases (chatbots, search engines, recommendation systems)

– Develop APIs for easy integration with existing systems

– Implement user feedback mechanisms for system improvement

– Implement data encryption and access control measures

– Ensure compliance with relevant regulations (GDPR, CCPA)

– Develop audit trails for data usage and model decisions
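As an illustration of the relevance metrics mentioned in the evaluation items above, the sketch below computes recall@k and reciprocal rank for a single query. The function names are hypothetical, and the metrics assume a hand-labeled set of relevant document IDs is available for each test query.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0
```

Averaging these values over a fixed query set gives a baseline that can be re-measured after each change to chunking, embeddings, or re-ranking.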

RAG definition and proper implementation.

AI has completely changed the search engine landscape, rewriting the rules for companies like yours. However, third-party AI tools are insufficient on their own because they don’t understand your particular content. Enter Retrieval Augmented Generation (RAG), a technology that tailors search results to users based on your own data. It’s about meaning, context, and comprehension, not just keywords.

However, you will undoubtedly have questions as you traverse this new territory:

- How does the magic happen, and what is RAG?

Imagine a robust search engine that is tuned to your own content rather than the broad, general internet. That is the secret sauce of RAG.

- Does this imply that keywords are going extinct?

Not exactly. Think of RAG as the new pilot of your AI-powered search application, with keyword search remaining the co-pilot.

- What is the reward?

Increased productivity, improved client experiences, and previously undiscovered insights. RAG reveals the latent potential in your data, enabling well-informed choices and propelling company expansion.

- Difficulties?

We have seen them all. With hundreds of successful search installations under our belt and 25 years of experience, Nextbrick has overcome every challenge imaginable. We will support you at every stage to make sure you implement RAG once, and correctly.

- Are you prepared to unleash the potential of search powered by AI?

Continue reading to find out more. Then get in touch with us, and we’ll assist you in customizing RAG to meet your unique requirements, opening up a new age of search effectiveness and commercial success.

RAG: What is it?

RAG is a relatively new method in natural language processing (NLP) that combines the accuracy of information retrieval systems with the strength of large language models (LLMs). The LLMs behind RAG systems are trained on vast amounts of content, including text, code, images, and even audio, and are frequently pre-existing general-purpose models from Amazon, Microsoft / OpenAI, Google, or Meta. A RAG system also gives the model access to an external knowledge base, which enables it to produce more precise and informative answers.

What is vector search, and how does it relate to RAG?

Vector search is a powerful method for locating similar items in large datasets, particularly unstructured data such as text, images, and audio. Rather than matching keywords, it represents content as mathematical vectors that capture its context and meaning. Our blog, Vector Search vs. Keyword Search, has further information.
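To make the idea concrete, here is a minimal sketch of vector search using cosine similarity over a tiny in-memory index. The two-dimensional vectors and the names `cosine_similarity` and `vector_search` are illustrative assumptions. In practice the vectors come from an embedding model and have hundreds or thousands of dimensions, and a vector database replaces the linear scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def vector_search(
    query_vec: list[float],
    index: dict[str, list[float]],
    top_k: int = 3,
) -> list[tuple[str, float]]:
    """Return the top_k (doc_id, similarity) pairs, most similar first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy index: made-up vectors standing in for real embeddings.
index = {"shoes": [1.0, 0.0], "boots": [0.8, 0.6], "hats": [0.0, 1.0]}
results = vector_search([1.0, 0.1], index, top_k=2)
```

Items whose vectors point in nearly the same direction as the query rank highest, regardless of whether they share any keywords with it.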

RAG is essentially a vector search use case. A key component of the Retrieval-Augmented Generation (RAG) architecture is vector search. It serves as the information retrieval engine that powers the large language model (LLM), which is in charge of producing answers.

Our comprehensive blog post describing Retrieval Augmented Generation has additional information about the RAG architecture and the use of vector search.
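The retrieve-then-generate flow described in this section can be sketched as below. This is a simplified illustration, not a specific vendor's API: `retrieve` and `generate` are hypothetical callables standing in for a vector search component and an LLM client, and the prompt wording is only an example.

```python
from typing import Callable


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble a prompt that asks the model to answer from the passages only."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def answer(
    question: str,
    retrieve: Callable[[str], list[str]],  # hypothetical: question -> passages
    generate: Callable[[str], str],        # hypothetical: prompt -> model output
) -> str:
    """Retrieve supporting passages, then ask the model to answer from them."""
    passages = retrieve(question)
    return generate(build_prompt(question, passages))
```

Passing the retriever and the model in as functions keeps the two halves independently replaceable, for example when switching vector databases or LLM providers.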

Why use RAG?

To put it simply, RAG is an architecture that uses artificial intelligence (AI) to let people search your content and get answers to their questions based on it. It also reduces the risk of “hallucinations,” in which generative AI produces unwanted or inaccurate responses.

This is essential for helping you maintain control and give your users the ideal experience, whether you are running search on an e-commerce website, a support portal, or a specialist knowledge application. RAG also enables you to develop a new conversational search paradigm that can be implemented in mobile applications, chatbots, support assistants, and search bars.

Will Keyword Search be replaced by RAG?

The short answer is no. Although some applications are suited to a pure RAG implementation, many customers will still choose a hybrid search solution that uses both AI and conventional keyword search. Users will value the ability to pose sophisticated questions, such as “Which running shoes are the best-selling?” However, they will also want to enter more conventional search keywords, such as “black Nike running shoes.”

Advantages of Using Hybrid Search and RAG

In addition to the apparent advantage of providing consumers with responses based solely on your content, your applications can provide:

More precise and pertinent results: RAG and hybrid search focus on what users need, understanding the context and subtleties of their questions. This saves time spent sorting through irrelevant links and lowers the risk of “hallucinations.”

Personalized insights: Receive information based on the interests and needs of a particular user. By using their query history, RAG can tailor information to your users’ preferences and prior knowledge.

Increased productivity: Make use of generative models’ potential. RAG can use the information it has retrieved to produce engaging, natural-sounding summaries of original content, or even responses to in-depth queries.

Better data integration: Easily include domain-specific or private information in your search. RAG can access and use sources that are not available to conventional search engines.

Increased auditability and transparency: Know where your information comes from. RAG promotes confidence and trust by enabling you to trace the retrieved documents that were used to produce your results.
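One way to support the auditability point above is to store each answer together with the documents it was generated from. The record layout below is a hypothetical sketch. The class and field names are assumptions, and a real audit trail would likely also record timestamps, user identity, and model version.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SourceRef:
    doc_id: str   # identifier of a retrieved document
    score: float  # retrieval score at query time


@dataclass(frozen=True)
class AuditedAnswer:
    question: str
    answer: str
    sources: tuple[SourceRef, ...]

    def to_log_line(self) -> str:
        """Serialize the record as one JSON line for an append-only audit log."""
        return json.dumps(asdict(self), sort_keys=True)


record = AuditedAnswer(
    question="What is the return policy?",
    answer="Returns are accepted within 30 days.",
    sources=(SourceRef(doc_id="policy-returns", score=0.91),),
)
log_line = record.to_log_line()
```

Because the sources travel with the answer, the same record can drive both user-facing citations and after-the-fact review.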

The difficulties and dangers of retrieval augmented generation

Although using RAG and hybrid search has many exciting advantages, there are drawbacks to take into account.

Content processing is still crucial: although it may appear that AI diminishes the significance of dictionaries, ontologies, and metadata, neglecting them can result in inaccurate search results and responses. Additionally, introducing inappropriate content can make the “hallucination” issue worse rather than better.

Performance and cost can be affected by LLMs: As a third party added to your architecture, LLMs may cause unforeseen performance problems and raise your search application’s operating expenses.

The implementation of hybrid search with RAG still necessitates specialist search knowledge, such as managing search indexes, building searchable vector databases, and designing user interfaces (UIs) that can incorporate faceting and other capabilities in addition to a basic chat-like interface.

Our blog post, “5 Common Challenges Implementing Retrieval Augmented Generation (RAG),” has considerably more information.

RAG can be implemented numerous times through trial and error, or once with Nextbrick.

Early on, using AI to create innovative search solutions can seem deceptively easy. Do you recall the “plug-and-play” claims made by early search vendors? Although open-source solutions like Elasticsearch and Solr provide flexibility, overcoming the challenges of AI search to attain enterprise-grade security, performance, and scalability is still quite difficult.

We at Nextbrick help close the gap between the potential of AI and its practical application in production. Leading companies like Mintel have benefited from our assistance in using Retrieval Augmented Generation (RAG) to transform their search platforms. We’re now applying this knowledge to manufacturers, merchants, and information portals to help them prosper in the era of AI-powered search.

Our combination of managed services, supplementary technology, and in-depth consulting and support ensures a seamless transition from vision to significant outcomes. For a customized consultation, and to learn how AI search may improve your company, get in touch with us.

We are your Retrieval-Augmented Generation (RAG) solution implementation partner.
