Retrieval Augmented Generation (RAG) Consulting Support Services



Building a Retrieval-Augmented Generation (RAG) Solution

Retrieval-Augmented Generation (RAG) is a powerful technique that combines the strengths of large language models (LLMs) and information retrieval to generate more accurate, relevant, and informative responses. By leveraging a knowledge base, RAG systems can access and process relevant information, ensuring that the generated content is grounded in factual data.

– Gather diverse data sources (text, images, audio, video)

– Preprocess data (cleaning, deduplication, PII handling)

Data Cleaning: Clean and preprocess the data to remove noise, inconsistencies, and irrelevant information.
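The cleaning, deduplication, and PII-handling steps above can be sketched roughly as follows. This is a minimal illustration that assumes plain-text documents. The function names and the two regular expressions are hypothetical and deliberately simple, so a production pipeline would need far more robust PII detection.

```python
import hashlib
import re

# Illustrative patterns only (assumptions): real PII detection needs much more care.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def clean_text(text: str) -> str:
    """Collapse runs of whitespace and trim both ends."""
    return re.sub(r"\s+", " ", text).strip()


def redact_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def preprocess(documents: list[str]) -> list[str]:
    """Clean, redact, and drop empty or duplicate documents (case-insensitive)."""
    seen: set[str] = set()
    result: list[str] = []
    for doc in documents:
        cleaned = redact_pii(clean_text(doc))
        if not cleaned:
            continue
        digest = hashlib.sha256(cleaned.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        result.append(cleaned)
    return result
```

Hashing the normalized text only catches exact duplicates. Near-duplicate detection (for example, with shingling or MinHash) would be a separate step.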

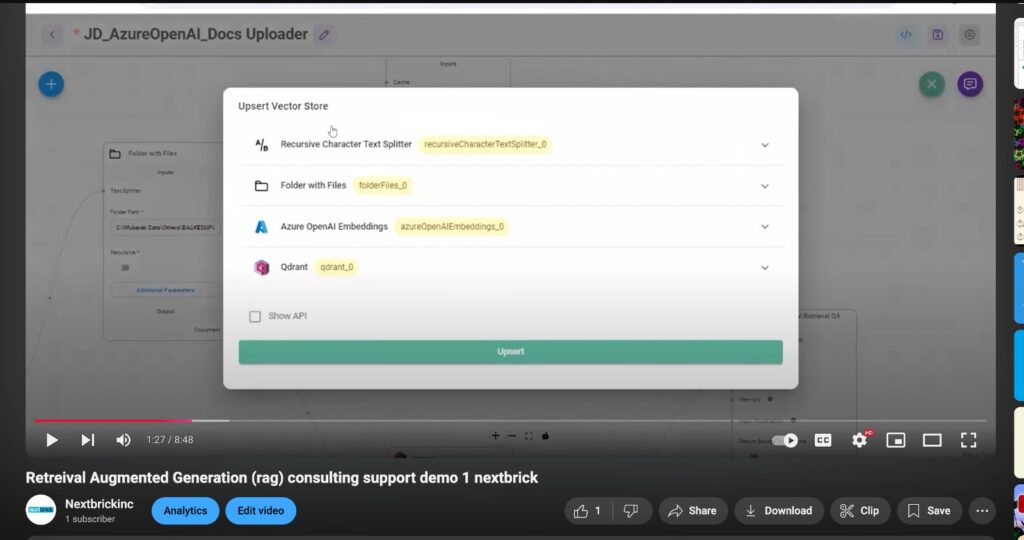

Data Chunking: Break down large documents into smaller, manageable chunks. This can be done based on semantic meaning, paragraph boundaries, or fixed-size chunks.
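As a rough sketch of two of the strategies mentioned above, the function below splits on paragraph boundaries first and falls back to fixed-size, overlapping windows for paragraphs that are too long. The name `chunk_text` and the default sizes are assumptions chosen for illustration. Semantic chunking would need an embedding model and is not shown.

```python
def chunk_text(text: str, max_chars: int = 500, overlap: int = 50) -> list[str]:
    """Split text into paragraph chunks, windowing any paragraph over max_chars."""
    if max_chars <= 0 or not 0 <= overlap < max_chars:
        raise ValueError("require max_chars > 0 and 0 <= overlap < max_chars")

    chunks: list[str] = []
    step = max_chars - overlap  # how far each fixed-size window advances
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        if len(paragraph) <= max_chars:
            chunks.append(paragraph)  # paragraph fits: keep it whole
            continue
        for start in range(0, len(paragraph), step):
            chunks.append(paragraph[start:start + max_chars])
            if start + max_chars >= len(paragraph):
                break  # the last window already reached the end
    return chunks
```

The overlap keeps some shared context between neighbouring windows, so a sentence cut at a window boundary still appears intact in at least one chunk when it is shorter than the overlap.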

– Implement multimodal chunking strategies

– Select or fine-tune embedding models for different modalities

– Generate embeddings for all data types

– Experiment with domain-specific embedding models

– Choose a scalable vector database (e.g., Pinecone, Weaviate, Qdrant, MongoDB, Elasticsearch)

– Index embeddings with metadata

– Implement hybrid search capabilities (dense and sparse retrieval)

– Develop query understanding and intent classification

– Implement query expansion and reformulation techniques

– Create multimodal query handling (text, image, voice inputs)

– Implement dense retrieval with customizable parameters

– Develop re-ranking algorithms for improved relevance

– Create ensemble retrieval methods combining multiple strategies

Search Mechanism: Implement hybrid search methods (dense + sparse retrieval) for optimal results
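One common way to combine dense and sparse results is reciprocal rank fusion (RRF). The text above does not prescribe a fusion method, so treat this as one possible sketch. It assumes each retriever returns an ordered list of document IDs, and uses the conventional constant `k = 60`.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) for every list it appears in,
    where rank starts at 1. Ties are broken alphabetically by ID.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a dense (vector) and a sparse (keyword) retriever.
dense_hits = ["a", "b", "c"]
sparse_hits = ["b", "d", "a"]
fused = reciprocal_rank_fusion([dense_hits, sparse_hits])
```

RRF works on ranks alone, which avoids having to normalize dense similarity scores and sparse keyword scores onto a common scale.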

– Design dynamic prompt engineering techniques

– Implement iterative retrieval for complex queries

– Develop context fusion methods for multimodal data

– Select and integrate appropriate LLMs (OpenAI GPT-4, Anthropic Claude, Google PaLM, Mistral AI, open-source models such as LLaMA and Falcon) for various use cases

– Implement model switching based on query complexity

– Develop fine-tuning pipelines for domain-specific tasks

– Implement multi-step reasoning for complex queries

– Develop fact-checking and hallucination detection mechanisms

– Create response formatting for different output modalities

– Implement comprehensive evaluation metrics (relevance, coherence, factuality)

– Develop feedback loops for continuous improvement

– Optimize system performance and latency

– Design a modular, microservices-based architecture

– Implement caching and load balancing strategies

– Develop monitoring and logging systems for production environments

– Create intuitive interfaces for various use cases (chatbots, search engines, recommendation systems)

– Develop APIs for easy integration with existing systems

– Implement user feedback mechanisms for system improvement

– Implement data encryption and access control measures

– Ensure compliance with relevant regulations (GDPR, CCPA)

– Develop audit trails for data usage and model decisions


Retrieval-Augmented Generation: What is it?

Retrieval-augmented generation (RAG) is a technique that optimizes the output of a large language model by having it consult an authoritative knowledge base outside its training data before producing a response. Large language models (LLMs) are trained on enormous amounts of data and use billions of parameters to generate original output for tasks such as answering questions, translating languages, and completing sentences. RAG extends the already powerful capabilities of LLMs to specific domains or an organization's own knowledge base, without requiring the model to be retrained. It is a cost-effective way to improve LLM output so that it stays accurate, relevant, and useful in a variety of settings.

What is the significance of retrieval-augmented generation?

LLMs are a key artificial intelligence (AI) technology behind intelligent chatbots and other natural language processing (NLP) applications. The goal is to build bots that can answer user questions in a variety of contexts by cross-referencing reliable knowledge sources. Unfortunately, the nature of LLM technology makes LLM responses unpredictable. In addition, LLM training data is static and imposes a cut-off date on the knowledge the model has.

Known difficulties faced by LLMs include:

- Presenting false information when the model does not have the answer.

- Presenting generic or outdated information when the user expects a precise, up-to-date response.

- Creating a response from non-authoritative sources.

- Creating inaccurate responses because of terminology confusion, where different training sources use the same terms to discuss different things.

You can think of the large language model as an overly eager new hire who refuses to keep up with current affairs but answers every question with complete confidence. Unfortunately, that attitude can undermine user trust, and it is not something you want your chatbots to adopt.

RAG is one approach to addressing some of these issues. It redirects the LLM to retrieve relevant information from reliable, pre-selected knowledge sources. Organizations gain more control over the generated text output, and users gain insight into how the LLM produces its response.

What advantages does retrieval-augmented generation offer?

RAG technology enhances an organization’s generative AI initiatives in a number of ways.

Cost-effective implementation

Chatbot development typically begins with a foundation model. Foundation models (FMs) are API-accessible LLMs trained on a broad range of generalized and unlabeled data. Retraining FMs on domain-specific or organization-specific knowledge carries a high computational and financial cost. RAG is a more cost-effective way to introduce new data to the LLM, and it makes generative artificial intelligence (generative AI) technology more broadly accessible and usable.

Current data

Even if the original training data sources for an LLM suit your needs, keeping that data relevant is difficult. RAG lets developers supply the generative models with the latest research, statistics, or news. With RAG, the LLM can be connected directly to news sites, social media feeds, and other frequently updated information sources, so it can give users the most recent information.

Increased user confidence

Thanks to RAG, the LLM can present accurate information with source attribution. The output can include citations or references to sources, and users can look up the source documents themselves if they need more detail or clarification. This can increase trust and confidence in your generative AI solution.

More developer control

With RAG, developers can test and improve their chat applications more efficiently. They can control and change the LLM's information sources to adapt to shifting requirements or cross-functional use. Developers can also restrict the retrieval of sensitive information to different authorization levels and make sure the LLM generates appropriate responses. If the LLM references incorrect information sources for particular questions, they can troubleshoot and fix the problem. As a result, organizations can apply generative AI technology with greater confidence to a wider range of applications.

How does Retrieval-Augmented Generation work?

Without RAG, the LLM takes the user input and generates a response based only on what it was trained on. RAG introduces an information retrieval component that first uses the user input to pull information from a new data source. Both the user query and the relevant retrieved information are then passed to the LLM, which uses this new knowledge together with its training data to produce a better response. The following sections give an overview of the process.

Create external data

External data is new information that was not part of the LLM's original training data set. It can come from a variety of data sources, including APIs, databases, and document repositories, and it may exist in formats such as files, database records, or long-form text. Another AI technique, called embedding language models, converts the data into numerical representations and stores them in a vector database. This process creates a knowledge library that the generative AI models can understand.

Retrieve relevant information

The next step is to perform a relevance search. The user query is converted to a vector representation and matched against the vector database. Take, for instance, a smart chatbot that answers human resources questions for an organization. If an employee asks, "How much annual leave do I have?", the system retrieves the annual leave policy documents along with that employee's past leave record. These specific documents are returned because they are highly relevant to what the employee asked. The relevancy is calculated and established using mathematical vector calculations and representations.
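The relevance search can be illustrated with a small, self-contained sketch. It makes a strong simplifying assumption: the `embed` function here is a toy bag-of-words counter standing in for a real embedding model, and the document list stands in for a vector database. Cosine similarity is one common choice for the vector comparison, though the text above does not name a specific metric.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Return the top_k (score, document) pairs, most similar first."""
    query_vector = embed(query)
    scored = [(cosine_similarity(query_vector, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```

With a real embedding model, semantically related text would score highly even without shared words. The toy version above only rewards exact word overlap.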

Augment the LLM prompt

Next, the RAG model augments the user input (or prompt) by adding the relevant retrieved data in context. This step uses prompt engineering techniques to communicate effectively with the LLM. The augmented prompt allows the large language model to generate accurate answers to user queries.
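A minimal sketch of prompt augmentation might look like the following. The template wording and the function name `build_augmented_prompt` are assumptions for illustration, and real systems tune this template to the model and the use case.

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user question into a single prompt."""
    # Number the passages so the model can refer to its sources.
    context = "\n".join(f"[{i}] {passage}" for i, passage in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )
```

Numbering the passages is one simple way to let the model cite the sources it used, which supports the source attribution described earlier.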

Update external data

The next question may be: what if the external data becomes outdated? To keep the information current for retrieval, update the documents and their embedding representations asynchronously. You can do this through automated real-time processes or periodic batch processing. This is a common challenge in data analytics, and various data-science approaches to change management can be used.
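One way to keep a periodic batch update cheap is to re-embed only documents whose content has changed. The sketch below assumes the index stores a content hash per document ID. The function names and the dictionary-based "index" are hypothetical placeholders for whatever store is actually used.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's current content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_index_update(
    documents: dict[str, str], indexed_hashes: dict[str, str]
) -> tuple[list[str], list[str]]:
    """Compare current documents with the hashes stored at indexing time.

    Returns (ids_to_embed, ids_to_delete): new or changed documents need
    fresh embeddings, and documents that disappeared should be removed.
    """
    to_embed = sorted(
        doc_id
        for doc_id, text in documents.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    )
    to_delete = sorted(doc_id for doc_id in indexed_hashes if doc_id not in documents)
    return to_embed, to_delete
```

The same comparison can run on a schedule for batch updates or be triggered by change events for near-real-time updates.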

The conceptual flow of employing RAG with LLMs is depicted in the following diagram.

What is the difference between Retrieval-Augmented Generation and semantic search?

Semantic search improves RAG results for organizations that want to add extensive external knowledge sources to their LLM applications. Modern enterprises store vast amounts of information across multiple systems, such as manuals, FAQs, research reports, customer service guides, and human resource document repositories. Context retrieval is difficult at scale, which in turn lowers the quality of the generated output.

Semantic search technologies can scan large databases of heterogeneous information and retrieve data more accurately. For instance, they can answer a question like "How much was spent on machinery repairs last year?" by mapping the question to the relevant documents and returning specific text instead of a list of search results. Developers can then use that answer to give the LLM more context.

Conventional or keyword search solutions in RAG produce limited results for knowledge-intensive tasks, and developers must also deal with word embeddings, document chunking, and other complexities as they manually prepare their data. In contrast, semantic search technologies handle all the work of knowledge base preparation so developers don't have to. They also generate semantically relevant passages and token words ordered by relevance to maximize the quality of the RAG payload.

How can AWS help you meet your needs for Retrieval-Augmented Generation?

With knowledge bases for Amazon Bedrock, you can connect FMs to your data sources for RAG in a matter of clicks, and vector conversions, retrievals, and enhanced output generation are all handled automatically. Amazon Bedrock is a fully-managed service that provides a variety of high-performing foundation models—along with a wide range of capabilities—to build generative AI applications while streamlining development and preserving privacy and security.

For organizations managing their own RAG, Amazon Kendra is a highly accurate enterprise search service powered by machine learning. It offers an optimized Kendra Retrieve API that you can use with Amazon Kendra's high-accuracy semantic ranker as an enterprise retriever for your RAG workflows. For instance, using the Retrieve API, you can:

- Retrieve up to 100 semantically relevant passages of up to 200 token words each, ordered by relevance.

- Use pre-built connectors to popular data technologies, such as Amazon Simple Storage Service, SharePoint, Confluence, and other websites.

- Support a wide range of document formats, including HTML, Word, PowerPoint, PDF, Excel, and text files.

- Filter responses based on the documents that the end-user permissions allow.
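To make the idea of permission-aware, relevance-ordered retrieval concrete, here is a generic sketch in plain Python. It does not call the Kendra Retrieve API or any AWS SDK. The `Passage` class, the group-based permission model, and the whitespace-based token count are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    text: str
    score: float                # relevance score from the retriever
    allowed_groups: frozenset   # groups permitted to read the source document


def filter_passages(
    passages: list[Passage],
    user_groups: set[str],
    max_passages: int = 100,
    max_tokens: int = 200,
) -> list[str]:
    """Keep passages the user may see, best first, capped in count and length."""
    # Drop anything the user's groups do not grant access to.
    visible = [p for p in passages if p.allowed_groups & user_groups]
    visible.sort(key=lambda p: p.score, reverse=True)
    # Approximate "token words" with whitespace-separated words (an assumption).
    return [" ".join(p.text.split()[:max_tokens]) for p in visible[:max_passages]]
```

Applying the permission check before the passages reach the prompt means restricted content never enters the LLM's context.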

For businesses looking to develop more specialized generative AI solutions, Amazon also provides options. Amazon SageMaker JumpStart is an ML hub that includes prebuilt ML solutions, built-in algorithms, and FMs that you can deploy with a few clicks. By using pre-existing SageMaker notebooks and code examples, you can expedite the implementation of RAG.