Retrieval Augmented Generation (RAG) Consulting Support Services


For Expert Retrieval Augmented Generation (RAG) & Consulting Support


Building a Retrieval-Augmented Generation (RAG) Solution

Retrieval-Augmented Generation (RAG) is a powerful technique that combines the strengths of large language models (LLMs) and information retrieval to generate more accurate, relevant, and informative responses. By leveraging a knowledge base, RAG systems can access and process relevant information, ensuring that the generated content is grounded in factual data.

– Gather diverse data sources (text, images, audio, video)

– Preprocess data (cleaning, deduplication, PII handling)

Data Cleaning: Clean and preprocess the data to remove noise, inconsistencies, and irrelevant information.

Data Chunking: Break down large documents into smaller, manageable chunks. This can be done based on semantic meaning, paragraph boundaries, or fixed-size chunks.

– Implement multimodal chunking strategies

– Select or fine-tune embedding models for different modalities

– Generate embeddings for all data types

– Experiment with domain-specific embedding models
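The chunking options mentioned above (paragraph boundaries or fixed-size chunks) can be sketched roughly as follows. This is a minimal illustration, not a production chunker: the function names, the character-based sizes, and the default values are assumptions made for the example, and semantic chunking is not covered.

```python
import re


def chunk_by_paragraph(text: str, max_chars: int = 500) -> list[str]:
    """Split on blank lines, then pack paragraphs into chunks of at most max_chars."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = para  # an oversized paragraph becomes its own chunk
    if current:
        chunks.append(current)
    return chunks


def chunk_fixed(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Fixed-size character windows; consecutive windows share `overlap` characters."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    if not text:
        return []
    step = size - overlap
    # Stop early enough that the last window is not fully contained in the previous one.
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]
```

Overlap between fixed-size windows is a common way to avoid cutting a sentence's context in half at a chunk boundary; the right size and overlap depend on the embedding model and the data.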

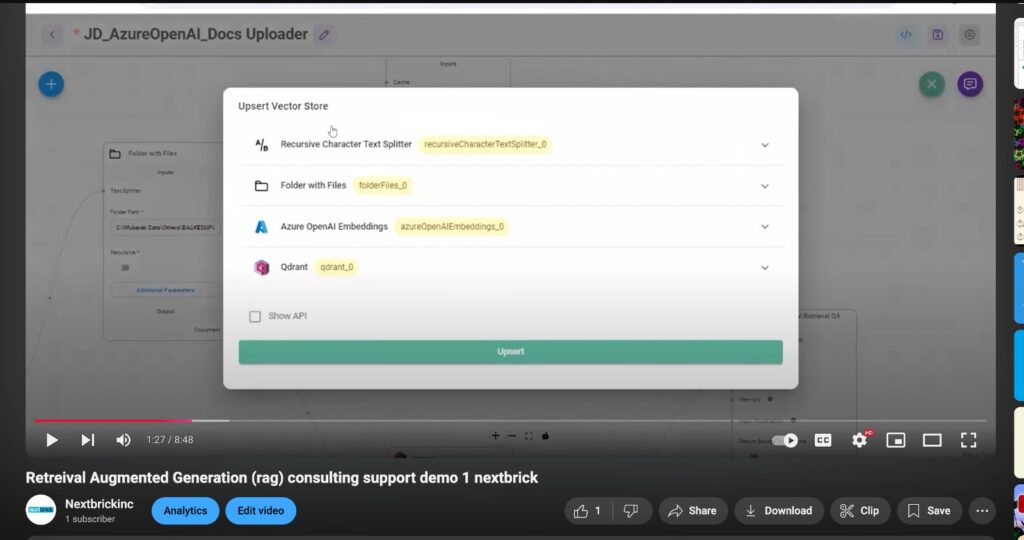

– Choose a scalable vector database (e.g., Pinecone, Weaviate, Qdrant, MongoDB, Elasticsearch)

– Index embeddings with metadata

– Implement hybrid search capabilities (dense and sparse retrieval)

– Develop query understanding and intent classification

– Implement query expansion and reformulation techniques

– Create multimodal query handling (text, image, voice inputs)

– Implement dense retrieval with customizable parameters

– Develop re-ranking algorithms for improved relevance

– Create ensemble retrieval methods combining multiple strategies

Search Mechanism: Implement hybrid search methods (dense + sparse retrieval) for optimal results

– Design dynamic prompt engineering techniques

– Implement iterative retrieval for complex queries

– Develop context fusion methods for multimodal data

– Select and integrate appropriate LLMs (OpenAI GPT-4, Anthropic Claude, Google PaLM, Mistral AI, open-source models such as LLaMA and Falcon) for various use cases

– Implement model switching based on query complexity

– Develop fine-tuning pipelines for domain-specific tasks

– Implement multi-step reasoning for complex queries

– Develop fact-checking and hallucination detection mechanisms

– Create response formatting for different output modalities

– Implement comprehensive evaluation metrics (relevance, coherence, factuality)

– Develop feedback loops for continuous improvement

– Optimize system performance and latency

– Design a modular, microservices-based architecture

– Implement caching and load balancing strategies

– Develop monitoring and logging systems for production environments

– Create intuitive interfaces for various use cases (chatbots, search engines, recommendation systems)

– Develop APIs for easy integration with existing systems

– Implement user feedback mechanisms for system improvement

– Implement data encryption and access control measures

– Ensure compliance with relevant regulations (GDPR, CCPA)

– Develop audit trails for data usage and model decisions
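As an illustration of the ensemble and hybrid retrieval items in the list above, one common way to combine a dense (embedding) ranking with a sparse (keyword) ranking is reciprocal rank fusion. The sketch below is one possible technique, not a prescribed one; the function name, the document IDs, and the constant `k=60` are assumptions chosen for the example.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score (descending); break ties by ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


dense_hits = ["doc3", "doc1", "doc7"]   # hypothetical embedding-search output
sparse_hits = ["doc1", "doc9", "doc3"]  # hypothetical keyword-search output
fused = reciprocal_rank_fusion([dense_hits, sparse_hits])
print(fused)
```

Because the fusion only uses rank positions, it does not require the dense and sparse scores to be on the same scale, which is one reason it is a popular starting point before a dedicated re-ranker is added.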


Empowering Businesses with Exceptional Technology Consulting

~ Case Studies ~

Retrieval Augmented Generation (RAG) Consulting Support Case Studies

Chatbot Development

System Migration from PHP to Python

RAG case study

RAG stands for retrieval-augmented generation.

RAG (Retrieval-Augmented Generation) is an AI framework that combines the strengths of traditional information retrieval systems (such as search and databases) with the capabilities of generative large language models (LLMs). By combining your data and world knowledge with the LLM’s language skills, grounded generation becomes more accurate, up to date, and relevant to your specific needs.

What is the process of Retrieval-Augmented Generation?

- Pre-processing and retrieval: RAG systems use search algorithms to query external data such as web pages, knowledge bases, and databases. Once retrieved, the relevant information is pre-processed, for example through tokenization, stemming, and stop-word removal.

- Grounded generation: The pre-processed retrieved information is then incorporated into the pre-trained LLM’s context, giving the model a more complete understanding of the topic. This enriched context allows the LLM to produce more accurate, informative, and engaging responses.

Retrieval is typically handled by a semantic search engine that uses embeddings stored in vector databases, together with ranking and query rewriting features, to ensure that the results are relevant to the query and answer the user’s question. RAG works by first retrieving relevant information from a database using a query generated by the LLM, and then adding this retrieved information to the LLM’s input prompt, enabling it to generate more accurate and contextually relevant text.
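The retrieve-then-augment flow described above can be sketched in a few lines. This is a toy illustration under stated assumptions: the word-overlap retriever stands in for a real semantic search engine, the knowledge base and prompt wording are invented for the example, and the final call to an LLM is left out.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Rank passages by how many query words they share (a stand-in for semantic search)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(p)), p) for p in passages]
    scored = [(s, p) for s, p in scored if s > 0]
    scored.sort(key=lambda sp: sp[0], reverse=True)  # stable: ties keep input order
    return [p for _, p in scored[:top_k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Insert the retrieved passages into the LLM input ahead of the question."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(context, start=1))
    return (
        "Answer using only the numbered sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}\nAnswer:"
    )


# Hypothetical knowledge base, for illustration only.
knowledge_base = [
    "The warranty period for the X100 router is 24 months.",
    "The X100 router supports Wi-Fi 6.",
    "Office hours are 9am to 5pm on weekdays.",
]
question = "How long is the X100 warranty?"
prompt = build_prompt(question, retrieve(question, knowledge_base))
print(prompt)
```

The resulting `prompt` string is what would be sent to the LLM; numbering the sources makes it easier to ask the model to cite which passage supports each statement.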

Why Make Use of RAG?

RAG has a number of benefits that enhance conventional text generation techniques, particularly when working with factual data or data-driven responses. The following are some of the main reasons that RAG can be useful:

Availability of new information

LLMs are limited to the data they were trained on, which can lead to outdated and potentially inaccurate responses. RAG overcomes this limitation by giving LLMs access to up-to-date information.

Grounding in facts

LLMs are powerful tools for generating creative and engaging text, but they can sometimes struggle with factual accuracy because they are trained on vast volumes of text data that may contain biases or mistakes.

The key to this strategy is making sure that the LLM receives the most pertinent facts and that the LLM output is fully based on those facts, all the while responding to the user’s query, following system guidelines, and respecting safety precautions. Giving the LLM “facts” as part of the input prompt can help reduce “gen AI hallucinations.”

Gemini’s long context window (LCW) is an excellent way to supply the LLM with source materials. If you need to provide more information than fits into the LCW, or if you need to scale up performance, you can use a RAG approach, which reduces the number of tokens and so saves time and cost.

Utilize vector databases and relevancy re-rankers for your search.

RAG systems typically retrieve facts through search, and modern search engines now use vector databases to retrieve relevant documents efficiently. Vector databases store documents as embeddings in a high-dimensional space, allowing for fast and accurate retrieval based on semantic similarity. Multi-modal embeddings can be used for images, audio, and video, among other media, and these media embeddings can be retrieved alongside text embeddings or multi-language embeddings.
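To make the idea of similarity-based retrieval concrete, here is a minimal in-memory sketch of a vector index using cosine similarity. The class name, the three-dimensional embeddings, and the document IDs are all made up for illustration; real embeddings have hundreds or thousands of dimensions, and production vector databases typically use approximate nearest-neighbour indexes rather than this brute-force scan.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorIndex:
    """Brute-force nearest-neighbour search over stored embeddings."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, doc_id: str, embedding: list[float]) -> None:
        self._items.append((doc_id, embedding))

    def search(self, query: list[float], top_k: int = 3) -> list[tuple[str, float]]:
        scored = [(doc_id, cosine(query, emb)) for doc_id, emb in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


index = TinyVectorIndex()
index.add("cat_photo", [0.9, 0.1, 0.0])    # hypothetical image embedding
index.add("dog_article", [0.8, 0.3, 0.1])  # hypothetical text embedding
index.add("tax_form", [0.0, 0.1, 0.9])     # hypothetical text embedding
results = index.search([1.0, 0.0, 0.0], top_k=2)
print(results)
```

Because images and text share one embedding space in this sketch, a single query vector can return both kinds of item, which is the behaviour multi-modal embedding models aim to provide.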

Advanced search engines like Vertex AI Search combine semantic search and keyword search (an approach known as hybrid search) and use a re-ranker that scores search results so that the top results are the most relevant. Searches also work best with a clear, focused query that is free of misspellings, so advanced search engines transform the query and correct spelling errors before looking it up.
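The query clean-up step mentioned above can be approximated with the standard library. The sketch below corrects misspelled query words against a known vocabulary using `difflib`; the vocabulary, the function name, and the 0.8 similarity cutoff are assumptions for the example, and managed search engines use far more sophisticated query rewriting.

```python
import difflib
import re


def correct_query(query: str, vocabulary: set[str], cutoff: float = 0.8) -> str:
    """Replace each out-of-vocabulary word with its closest vocabulary term, if any."""
    candidates = sorted(vocabulary)  # sorted for deterministic results
    corrected = []
    for word in re.findall(r"\w+", query.lower()):
        if word in vocabulary:
            corrected.append(word)
            continue
        match = difflib.get_close_matches(word, candidates, n=1, cutoff=cutoff)
        corrected.append(match[0] if match else word)  # keep unknown words as-is
    return " ".join(corrected)


# Hypothetical vocabulary, e.g. terms taken from the indexed documents.
vocab = {"vector", "database", "pricing", "retrieval"}
fixed = correct_query("Vectr databse pricing", vocab)
print(fixed)
```

Building the vocabulary from the indexed corpus keeps corrections anchored to terms that can actually be found, at the cost of leaving genuinely new words untouched.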

Quality, correctness, and relevance

RAG’s retrieval mechanism is crucial; to make sure that the information retrieved is pertinent to the input query or context, you need the best semantic search on top of a carefully curated knowledge base. If the information retrieved is irrelevant, your generation may be grounded but inaccurate or off-topic.

Fine-tuning or prompt-engineering the LLM to produce text based solely on the retrieved knowledge helps RAG reduce contradictions and inconsistencies in the generated text. This greatly improves the quality of the generated text and the user experience.

The Vertex Eval Service now rates LLM generated text and retrieved chunks based on metrics such as “coherence,” “fluency,” “groundedness,” “safety,” “instruction_following,” “question_answering_quality,” and more. These metrics assist you in measuring the grounded text you receive from the LLM (for some metrics, that is a comparison to a ground truth answer you have provided). By putting these evaluations into practice, you can optimize for RAG quality by adjusting your search engine, curating your source data, enhancing source layout parsing or chunking strategies, or improving the user’s query before search. A RAG Ops, metrics-driven approach such as this will help you climb the ladder to high quality RAG and grounded generation.
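To give a feel for what a metric such as groundedness measures, here is a deliberately crude proxy: the fraction of answer sentences whose words mostly appear in the retrieved chunks. This is not how the Vertex Eval Service computes its metrics; the function name, the word-overlap heuristic, and the 0.6 threshold are assumptions made purely for illustration.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def groundedness(answer: str, chunks: list[str], threshold: float = 0.6) -> float:
    """Fraction of answer sentences whose words mostly appear in the retrieved chunks."""
    source_words: set[str] = set().union(*map(_words, chunks))
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = _words(sentence)
        if words and len(words & source_words) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences)


# Hypothetical retrieved chunk and answers, for illustration only.
chunks = ["The X100 router has a 24 month warranty."]
good = groundedness("The X100 has a 24 month warranty.", chunks)
mixed = groundedness(
    "The X100 has a 24 month warranty. It also ships with a free laptop.", chunks
)
print(good, mixed)
```

Tracking even a rough score like this across changes to chunking, retrieval, or prompts makes regressions visible, which is the point of a metrics-driven approach; model-based evaluators are needed to judge paraphrases and subtle unsupported claims.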

Chatbots, agents, and RAGs

Any LLM application or agent that requires access to new, confidential, or specialized data can incorporate RAG and grounding. By drawing on external data, RAG-powered chatbots and conversational agents deliver more thorough, informative, and context-aware responses, improving the overall user experience.

What sets your gen AI project apart are your use case and your data. RAG and grounding deliver your data to LLMs efficiently and at scale.

For businesses managing their own RAG, Amazon Kendra is a highly accurate enterprise search service powered by machine learning. It offers an optimized Kendra Retrieve API that you can use, together with Amazon Kendra’s high-accuracy semantic ranker, as an enterprise retriever for your RAG workflows. For instance, using the Retrieve API, you can:

- Retrieve up to 100 semantically relevant passages of up to 200 token words each, ordered by relevance.

- Use pre-built connectors to popular data technologies such as Amazon Simple Storage Service (Amazon S3), SharePoint, Confluence, and other websites.

- Work with a wide range of document formats, including HTML, Word, PowerPoint, PDF, Excel, and text files.

- Filter responses based on the documents that the end-user permissions allow.
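A minimal sketch of calling the Retrieve API from Python might look like the following. It assumes the boto3 Kendra client and its `retrieve` operation; the helper name `retrieve_passages`, the simplified output fields, and the placeholder index ID are hypothetical, and permission filtering is omitted for brevity.

```python
def retrieve_passages(kendra_client, index_id: str, query: str, page_size: int = 10) -> list[dict]:
    """Call the Kendra Retrieve API and return simplified passage records."""
    response = kendra_client.retrieve(
        IndexId=index_id,
        QueryText=query,
        PageSize=page_size,  # number of passages to request per page
    )
    return [
        {
            "title": item.get("DocumentTitle", ""),
            "uri": item.get("DocumentURI", ""),
            "text": item.get("Content", ""),
        }
        for item in response.get("ResultItems", [])
    ]


# Typical wiring (requires AWS credentials and an existing Kendra index):
#   import boto3
#   client = boto3.client("kendra")
#   passages = retrieve_passages(client, "YOUR-INDEX-ID", "What is the leave policy?")
```

Passing the client in as a parameter keeps the helper easy to test with a stub and leaves region and credential configuration to the caller.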

Amazon also provides options for businesses looking to build more customized generative AI solutions. Amazon SageMaker JumpStart is an ML hub that includes foundation models (FMs), built-in algorithms, and prebuilt ML solutions that you can deploy with a few clicks. You can speed up RAG implementation by using existing SageMaker notebooks and code examples.

Which services and products offered by Google Cloud are associated with RAG?

The Google Cloud products listed below are associated with Retrieval-Augmented Generation.

- Vertex AI Search

Vertex AI Search is a fully managed, out-of-the-box search and RAG builder that works like Google Search for your data.

- Vertex AI Vector Search

Vertex AI Vector Search is the highly efficient vector index that powers Vertex AI Search. It enables semantic and hybrid search and retrieval over massive embedding sets, with high recall at high query rates.

- BigQuery

BigQuery stores large datasets that you can use to train machine learning models, including models for Vertex AI Vector Search.

- Grounded Generation API

Use Google Search, or bring your own search engine, as the grounding source for Gemini high-fidelity mode.

- AlloyDB

Run models in Vertex AI and access them in your application using familiar SQL queries. Use Google models such as Gemini, or your own custom models.

- LlamaIndex on Vertex AI

Create your own RAG and grounding search engine using Google or open-source components and Google’s fully managed orchestration system built on LlamaIndex.