Retrieval Augmented Generation (RAG) Consulting Support Services

What is RAG, or retrieval-augmented generation?

Retrieval-augmented generation (RAG) is a technique for improving the accuracy and reliability of generative AI models with information retrieved from external sources.

To understand this latest advance in generative AI, picture a courtroom.

Judges hear cases and make decisions based on their general knowledge of the law. When a case calls for specialized knowledge, such as a malpractice action or a labor dispute, judges send court clerks to a law library to find precedents and specific cases they can cite.

Like a good judge, large language models (LLMs) can answer a wide range of human questions. To give authoritative responses that cite sources, however, the model needs an assistant to do some research.

The court clerk of AI is the process known as retrieval-augmented generation, or RAG for short.

How "RAG" Got Its Name

Patrick Lewis, lead author of the 2020 paper that coined the term, has apologized for the unflattering acronym. It now describes a growing family of methods found in hundreds of papers and dozens of commercial services, which he believes represent the future of generative AI.

In an interview from Singapore, where he was presenting his ideas to a regional meeting of database developers, Lewis stated, “We definitely would have put more thought into the name had we known our work would become so widespread.”

Lewis, who currently heads a RAG team at AI company Cohere, stated, “We always planned to have a nicer sounding name, but when it came time to write the paper, no one had a better idea.”

RAG stands for retrieval-augmented generation.

Retrieval-augmented generation is a technique for improving the accuracy and reliability of generative AI models with information retrieved from external sources.

In other words, it fills a gap in how LLMs work. Under the hood, LLMs are neural networks, typically measured by how many parameters they contain. Those parameters capture the general patterns of how people use words to form sentences.

This deep understanding, sometimes called parameterized knowledge, lets LLMs respond to general prompts quickly. It does not, however, serve users who want to go deeper into a specialized or current topic.

Integrating External and Internal Resources

Lewis and his colleagues developed retrieval-augmented generation to link generative AI services to external resources, especially those rich in up-to-date technical details.

The paper, which had coauthors from the former Facebook AI Research (now Meta AI), University College London, and New York University, called RAG “a general-purpose fine-tuning recipe” because nearly any LLM can use it to connect with practically any external resource.

Increasing User Confidence

Retrieval-augmented generation gives models sources they can cite, like footnotes in a research paper, so users can check any claims. That builds trust.

The technique can also help models resolve ambiguity in a user question, and it reduces the likelihood that a model will guess incorrectly, a phenomenon sometimes called hallucination.
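One way to picture the footnote idea is a prompt that numbers each retrieved passage so the model can refer to it. The following is a minimal sketch, not a prescribed method: the `Passage` type, the `build_cited_prompt` function, the file names, and the prompt wording are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    """A retrieved snippet plus the label of the source it came from."""
    source: str
    text: str


def build_cited_prompt(question: str, passages: list[Passage]) -> str:
    """Number each passage so the model can cite it as [1], [2], and so on."""
    numbered = [
        f"[{i}] ({p.source}) {p.text}"
        for i, p in enumerate(passages, start=1)
    ]
    return (
        "Answer using only the sources below and cite them by number.\n"
        "If the sources do not contain the answer, say so.\n\n"
        "Sources:\n" + "\n".join(numbered) + "\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Hypothetical passages; a real system would get these from retrieval.
    passages = [
        Passage("hr-policy.pdf", "Employees accrue 1.5 vacation days per month."),
        Passage("faq.md", "Unused vacation days expire after 18 months."),
    ]
    print(build_cited_prompt("How many vacation days do I earn?", passages))
```

Because every passage carries a number and a source label, a reader can trace a cited claim in the answer back to the document it came from.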

Another big advantage of RAG is that it is relatively easy to implement. According to a blog post by Lewis and three of the paper’s coauthors, developers can implement the process with as few as five lines of code.

That makes the approach faster and less expensive than retraining a model with additional datasets, and it lets users hot-swap new sources on the fly.
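The hot-swap idea can be sketched as a retriever whose knowledge source is replaced at runtime while the model stays untouched. This is an illustrative assumption about how such a component might look, not the code from the blog post mentioned above; `SwappableRetriever`, `swap_source`, and the word-overlap scoring are hypothetical stand-ins for a real embedding-based retriever.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for a real embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class SwappableRetriever:
    """Holds a knowledge source that can be replaced while the app runs."""

    def __init__(self, documents: list[str]) -> None:
        self.swap_source(documents)

    def swap_source(self, documents: list[str]) -> None:
        # Rebuilding this small index replaces the knowledge source;
        # the language model itself is untouched and needs no retraining.
        self._index = [(doc, tokenize(doc)) for doc in documents]

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        """Return the k documents sharing the most words with the query."""
        query_tokens = tokenize(query)
        ranked = sorted(
            self._index,
            key=lambda item: len(query_tokens & item[1]),
            reverse=True,
        )
        return [doc for doc, _ in ranked[:k]]


if __name__ == "__main__":
    retriever = SwappableRetriever(["The 2023 return window is 30 days."])
    print(retriever.retrieve("What is the return window?"))
    retriever.swap_source(["The 2024 return window is 45 days."])
    print(retriever.retrieve("What is the return window?"))
```

The same question yields different supporting text before and after the swap, which is the cost advantage over retraining: only the index changes.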

How RAG Is Being Used

With retrieval-augmented generation, users can essentially hold conversations with data repositories, which opens up new kinds of experiences. The potential applications for RAG could therefore be many times the number of available datasets.

For example, a generative AI model supplemented with a medical index could be a great assistant for a doctor or nurse, and financial analysts would benefit from an assistant linked to market data.

In fact, almost any business can turn its technical or policy manuals, videos, or logs into knowledge bases that enhance LLMs. These sources can enable use cases such as customer or field support, employee training, and developer productivity.

That broad potential is why companies including AWS, IBM, Glean, Google, Microsoft, NVIDIA, Oracle, and Pinecone are adopting RAG.

Getting Started with Retrieval-Augmented Generation

To help customers get started, NVIDIA created an AI Blueprint for building virtual assistants. Businesses can use this reference architecture to quickly scale their customer service operations with generative AI and RAG, or to start building a new customer-centric solution.

NVIDIA NeMo Retriever, a set of user-friendly NVIDIA NIM microservices for large-scale information retrieval, and some of the most recent AI-building techniques are used in the blueprint. NIM makes it simple to deploy safe, high-performance AI model inferencing across workstations, data centers, and clouds.

These components are all part of NVIDIA AI Enterprise, a software platform that speeds up the development and deployment of production-ready AI while providing the security, support, and stability that businesses require.

There is also a free hands-on NVIDIA LaunchPad lab for building AI chatbots with RAG, so that developers and IT teams can quickly and accurately generate responses based on company data.

RAG workflows require vast amounts of memory and compute to move and process data. The NVIDIA GH200 Grace Hopper Superchip, with its 288GB of fast HBM3e memory and 8 petaflops of compute, is well suited to them and can deliver a 150x speedup over using a CPU.

Once companies become comfortable with RAG, they can combine a variety of off-the-shelf or custom LLMs with internal or external knowledge sources to build a wide range of assistants that support their staff and customers.

RAG doesn’t need a data center. LLMs are making their debut on Windows PCs, thanks to NVIDIA software that lets users access a variety of applications even on their laptops.

PCs with NVIDIA RTX GPUs can now run some AI models locally. With RAG on a PC, users can link to a private knowledge source, such as emails, notes, or articles, to improve responses, and they can be confident that their data source, prompts, and responses all remain private and secure.

TensorRT-LLM for Windows accelerates RAG to get better results more quickly, as demonstrated in a previous blog.

The Background of RAG

The technique’s roots go back at least to the early 1970s, when information retrieval researchers prototyped what they called question-answering systems: apps that use natural language processing (NLP) to access text, initially on narrow topics such as baseball.

The concepts behind this kind of text mining have stayed fairly constant over the years, but the machine learning engines that drive them have grown significantly, making them more useful and more popular.

With its logo of a smartly dressed valet, the Ask Jeeves service (now Ask.com) popularized question answering in the mid-1990s. IBM’s Watson rose to fame on television in 2011 after defeating two human champions handily on the Jeopardy! game show.

These days, LLMs are revolutionizing question-answering systems.

Perspectives From a London Laboratory

The groundbreaking 2020 paper arrived while Lewis was pursuing a doctorate in NLP at University College London and working for Meta at a new London AI lab. The team was looking for ways to pack more knowledge into an LLM’s parameters and was using a benchmark it had developed to measure its progress.

Lewis noted that the team “had this compelling vision of a trained system that had a retrieval index in the middle of it, so it could learn and generate any text output you wanted,” building on previous approaches and drawing inspiration from a paper written by Google researchers.

When Lewis plugged a promising retrieval system from another Meta team into the work in progress, the first results were very impressive.

Lewis recalled showing the results to his boss, who remarked, “Whoa, take the win. This sort of thing doesn’t happen very often,” because these workflows can be hard to set up correctly the first time.

Lewis also acknowledges the significant contributions made by team members Douwe Kiela and Ethan Perez, who were then with Facebook AI Research and New York University, respectively.

When finished, the work, which ran on a cluster of NVIDIA GPUs, showed how to make generative AI models more authoritative and trustworthy. Hundreds of papers have since cited it, expanding and amplifying the ideas in what is still a very active field of research.

How Retrieval-Augmented Generation Works

This is an overview of the RAG process from an NVIDIA technical brief.

When users pose a question to an LLM, the AI model forwards the query to another model, which transforms it into a numeric form that can be read by machines; this numeric form of the query is sometimes referred to as a vector or an embedding.

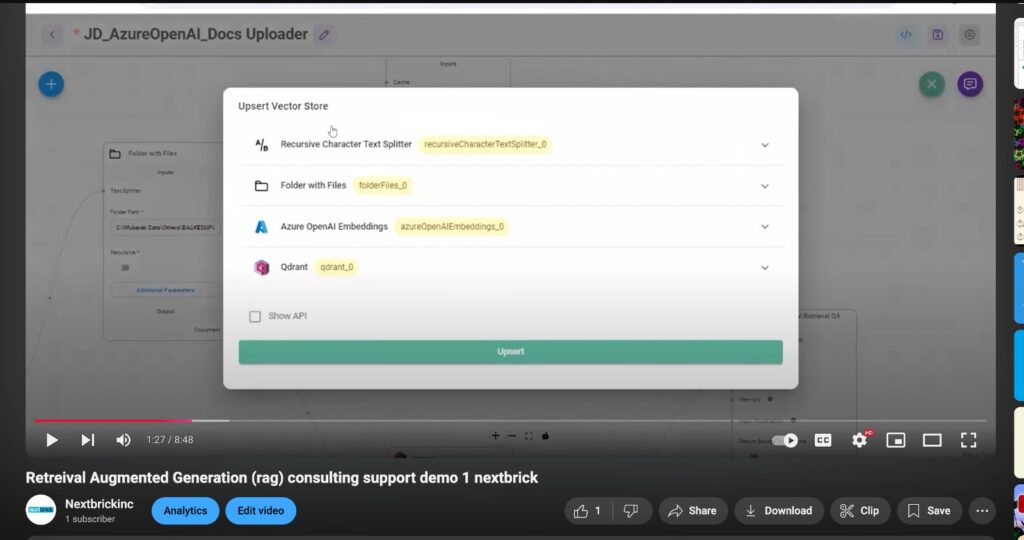

LLMs are combined with vector databases and embedding models in retrieval-augmented generation.

The embedding model then compares these numeric values to vectors in a machine-readable index of an available knowledge base. When it finds one or more matches, it retrieves the related data, converts it to human-readable words, and passes it back to the LLM.

Finally, the LLM combines the retrieved words with its own response to the query into a final answer that it presents to the user, potentially citing the sources the embedding model found.
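The steps above can be sketched end to end in a few dozen lines. This is a toy illustration under stated assumptions, not NVIDIA's implementation: `embed` uses simple word counts in place of a real embedding model, a Python list stands in for the vector database, and `echo_llm` is a hypothetical placeholder for an actual LLM call.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_index(documents: list[str]) -> list[tuple[str, Counter]]:
    """Stand-in for a vector database: each document stored with its vector."""
    return [(doc, embed(doc)) for doc in documents]


def retrieve(query: str, index, k: int = 2) -> list[str]:
    query_vec = embed(query)  # step 1: turn the query into a vector
    scored = [(cosine(query_vec, vec), doc) for doc, vec in index]  # step 2: compare
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]  # step 3: return matching text


def answer(query: str, index, llm) -> str:
    context = retrieve(query, index)
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"
    return llm(prompt)  # step 4: the LLM writes the final response


def echo_llm(prompt: str) -> str:
    """Hypothetical placeholder that just returns the prompt it was given."""
    return prompt


if __name__ == "__main__":
    index = build_index([
        "Ask Jeeves popularized question answering in the mid-1990s.",
        "IBM Watson won on the Jeopardy! game show in 2011.",
    ])
    print(answer("When did IBM Watson win Jeopardy?", index, echo_llm))
```

Swapping `embed` for a learned embedding model and the list for a real vector store changes the quality of the matches, but the control flow stays the same: embed, compare, retrieve, generate.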

Maintaining Up-to-Date Sources

As new and updated knowledge bases become available, the embedding model continuously builds and updates machine-readable indices, also known as vector databases, in the background.
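A background refresh of this kind might look like the sketch below, which re-embeds only documents whose content has changed. It is an assumption about one reasonable design, not a description of any particular vector database: `VectorIndex`, `upsert`, and `sync` are hypothetical names, and `embed` is again a word-count placeholder for a real embedding model.

```python
import hashlib
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (word counts); a placeholder for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


class VectorIndex:
    """In-memory stand-in for a vector database keyed by document ID."""

    def __init__(self) -> None:
        # Maps document ID to (content hash, vector).
        self._entries: dict[str, tuple[str, Counter]] = {}
        self.embed_calls = 0  # counts how often the embedding step actually ran

    def upsert(self, doc_id: str, text: str) -> bool:
        """Embed new or changed text; return False if nothing had to be done."""
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        existing = self._entries.get(doc_id)
        if existing is not None and existing[0] == digest:
            return False  # unchanged content, keep the stored vector
        self._entries[doc_id] = (digest, embed(text))
        self.embed_calls += 1
        return True

    def delete(self, doc_id: str) -> None:
        self._entries.pop(doc_id, None)

    def sync(self, documents: dict[str, str]) -> None:
        """Bring the index in line with the current set of documents."""
        for stale_id in set(self._entries) - set(documents):
            self.delete(stale_id)
        for doc_id, text in documents.items():
            self.upsert(doc_id, text)

    def __len__(self) -> int:
        return len(self._entries)


if __name__ == "__main__":
    index = VectorIndex()
    index.sync({"manual": "Reset the router by holding the button."})
    index.sync({"manual": "Reset the router by holding the button for 10 seconds."})
    print(len(index), index.embed_calls)
```

Hashing the content before embedding keeps repeated syncs cheap, since unchanged documents skip the embedding step entirely.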

NVIDIA’s reference architecture for retrieval-augmented generation employs LangChain, an open-source toolkit that many developers find very helpful for chaining together LLMs, embedding models, and knowledge stores.

An explanation of a RAG process is given by the LangChain community.

Looking ahead, the future of generative AI lies in creatively chaining together all sorts of LLMs and knowledge bases to build new kinds of assistants that deliver authoritative results users can verify.

Attend NVIDIA GTC, the international conference on AI and accelerated computing, which will take place online and in San Jose, California, from March 18–21, to learn about generative AI sessions and experiences.