Generative AI

Service Offering

AI Technology Consulting

Empower your organization with GenAI-based strategies as we guide the seamless integration of AI into your IT infrastructure. Unleash AI’s full potential to foster innovation and gain a competitive edge.

Generative AI Model Development

Elevate your business to unparalleled heights by harnessing our expertise in crafting tailored AI models for unique outputs. Explore new dimensions of innovation and stay ahead in the competitive landscape.

Model Integration and Deployment

Our generative AI expertise ensures the seamless integration and deployment of AI models, driving tangible results and optimizing productivity for sustainable growth. Make informed, data-driven decisions with advanced AI models.

MLOps

Ensure the smooth operation of NLP-based solutions throughout their lifespan with Nextbrick’s GenAI services. Support, monitor, optimize, and maintain model performance for continuous and efficient NLP solution functionality.

System Architecting

Leverage our generative AI architectural services to implement systems that yield exceptional and innovative outcomes. Harness the power of frameworks to transform your business and achieve outstanding results.

Model Finetuning

Refine and enhance the output quality and accuracy of your models swiftly. Integrate generative AI seamlessly into applications, achieving unparalleled levels of precision.

Our Generative AI Consulting Services

Use Case Identification and Feasibility Evaluation

Our team assists in identifying potential use cases for generative AI within your organization. We conduct feasibility studies to assess the viability and potential impact of implementing generative AI solutions, providing you with insights to make informed decisions.

Technology Assessment and Selection

We conduct in-depth assessments of diverse generative AI technologies and tools, including models such as GPT-4, Llama, PaLM 2, and BERT, and analyze their capabilities and compatibility with your organization’s specific requirements to ensure optimal selection and implementation.

Compliance and Security Consultation

Leveraging our knowledge of critical regulations like GDPR, CCPA, and HIPAA, we preserve data privacy for your generative AI solution and ensure system compliance. This mitigates potential risks while maintaining data integrity.

Data Engineering

We prepare your data for model training using advanced data engineering tools and techniques. Then, leveraging our ML expertise, we use this data to build custom models designed to perform specific tasks such as predictive analysis.

Maintenance and Support

Our team ensures the continued functionality, performance optimization, and adaptation of your AI solutions to meet changing needs. Whether it’s addressing updates, fine-tuning algorithms, or providing responsive technical support, our goal is to ensure your GenAI solutions consistently operate at peak efficiency.

Generative AI Integration

We work closely with your organization to ensure a smooth implementation of generative AI applications within your existing systems and processes. Our goal is to minimize disruptions and maximize the benefits of generative AI, enabling you to leverage its capabilities without obstacles or complications.

Generative AI Solution Development

We build robust generative AI solutions for diverse domains, from natural language generation for content creation to image synthesis for creative design and beyond, empowering businesses to streamline processes, gain actionable insights, and drive unprecedented efficiency.

Custom LLM Development

Our experts optimize LLMs specifically for your business. We start by selecting the right pre-trained model for your needs, prepare your proprietary data, and then employ fine-tuning techniques to train the model with your data, ensuring your custom LLM offers accurate, domain-specific responses.

Nextbrick AI capabilities and expertise

AI Use Cases

- Improve customer experiences

- Chatbots and virtual assistants: Streamline customer self-service processes and reduce operational costs by automating responses for customer service queries through generative AI-powered chatbots, voice bots, and virtual assistants.

- Conversational analytics: Analyze unstructured customer feedback from surveys, website comments, and call transcripts to identify key topics, detect sentiment, and surface emerging trends.

- Personalization: Deliver better personalized experiences and increase customer engagement with individually curated offerings and communications.

- Content Moderation: Moderate large and complex volumes of user-generated content to create safe online environments, protect your brand, and minimize moderation costs.

- Identity Verification: Enable online user identity verification powered by AI to onboard new users in seconds, grow and protect your customer base, reduce fraud, and lower user verification costs.

- Boost employee productivity

- Employee assistant: Improve employee productivity by helping staff quickly find accurate information, get accurate answers, and create and summarize content through a conversational interface.

- Code generation: Accelerate application development with code suggestions based on the developer’s comments and code.

- Automated report generation: Generative AI can be used to automatically generate financial reports, summaries, and projections, saving time and reducing errors.

- Accelerate process optimization

- Document processing: Improve business operations by automatically extracting and summarizing data and insights from documents through generative AI-powered question answering.

- Media insights: Create new insights from video, audio, images, and text by applying machine learning to better manage and analyze content. Automate key functions of the media workflow to accelerate search and discovery, content localization, compliance, monetization, and more.

- Supply chain optimization: Improve logistics and reduce costs by evaluating and optimizing different supply chain scenarios.

- Sales content creation: Generate personalized emails and messages based on a prospect’s profile and behavior, improving response rates. Generate sales scripts or talking points based on the customer’s segment, industry, and the product or service.

- Product development: Generative AI can generate multiple design prototypes based on certain inputs and constraints, speeding up the ideation phase, or optimize existing designs based on user feedback and specified constraints.

- Agent assist: Enhance agent performance and improve first contact resolution through task automation, summarization, enhanced knowledge base searches, and tailored cross-sell/upsell product recommendations.

- Marketing content creation: Create engaging marketing content, such as blog posts, social media updates, or email newsletters, saving time and resources.

- Planogram optimization: Dynamically update planograms based on new products, changing inventory levels, sales trends and competitor data.

- Intelligent advisory: With chatbots and call center assist, firms can automatically translate complex questions from internal users and external customers into their semantic meaning, analyze for context, and then generate highly accurate and conversational responses.

- Interpret medical images and documentation: Enhance, reconstruct, or even generate medical images like X-rays, MRIs, or CT scans, which can aid in better diagnosis.

- Content Moderation: Moderate large and complex volumes of user-generated content using ML. Sub Use Cases: Online content moderation, Brand safety, Protect PII and PHI information.

- Machine Translation: Localize content such as websites and applications for your diverse users, easily translate large volumes of text for analysis, and efficiently enable cross-lingual communication between users.

- AI-Enabled Contact Center: Resolve issues faster, personalize the customer experience, and improve operational efficiency in your contact center using AI. Sub Use Cases: Self-service, Real-time analytics, Post-call analytics, and Agent assist.

- Forecasting: Accurately forecast sales, financial, and demand data to streamline planning and decision-making. Sub Use Cases: Sales forecasting, Demand forecasting, and Price forecasting.

- Personalized recommendations: Analyze customer data to create customized promotions and personalized product recommendations for customers.

- Virtual try-ons: Generative AI can synthesize realistic images of people wearing different clothing items, enabling immersive virtual try-on experiences.

- Predictive Maintenance: Take proactive action and prevent problems before they occur, using the data collected from machine sensors and systems, under unique operating conditions, to automatically detect abnormal machine conditions as early as possible using ML.

- Ambient digital scribe: Automatically create transcripts, extract key details, and create summaries from clinician-patient interactions.

- Identity Verification: Enable identity verification using ML-powered facial biometrics. Sub Use Cases: Onboarding and authentication Workflows, Know your customer workflows, Account access.

- Fraud Detection: Automate the detection of potentially fraudulent online activities, such as payment fraud, fake accounts, account takeover, or promotion abuse, among others, using ML. Sub Use Cases: Payment fraud detection, New account fraud, Account takeover, Promotion abuse, Fake or abusive reviews, and ID verification.

- Product descriptions: Generative AI can automatically generate unique, high-quality product descriptions and listings based on product data.

- AI-powered maintenance assistants: Generative conversational agents can be trained on product manuals, troubleshooting guides, and maintenance notes to deliver swift technical support to workers, reducing downtime.

- Product design optimization: Generative AI can quickly generate and assess many design options, helping manufacturers find the most optimized, efficient, and cost-effective solutions.

- Automated research reporting: Generate documents or narratives based on drug discovery research datasets, such as proprietary scientific reports. For example, collate phase 1 clinical trials for a candidate therapeutic.

- Intelligent Health Assist: Enable payor agent assist, call report summarization, agent performance assessment for health care insurance providers.

- Real-time equipment diagnostics: By ingesting historical data, generative AI can diagnose equipment failures in real time and recommend maintenance actions like input adjustments, repairs, or likely spare parts.

- Increase the business value of unstructured content: Create on-demand structured data products (e.g., competitor maps, supply chain relationships, product & service catalogs) from large unstructured data sources such as emails, document repositories, and filings.

- Produce high-quality content at scale: Generate characters, animations, and visual effects tailored to specific themes, genres, or formats.

- Optimize pricing: Continuously run simulations to set optimal pricing for goods based on expiration dates, competition, location, and others.

- Visual Inspection: Inspect product stock, flow, or location using computer vision. Sub Use Cases: Video surveillance and detection, Visual damage estimation, Asset localization, Warehouse optimization.

- Workplace Safety: Ensure workplace safety in hazardous environments using computer vision.

- AI for IT Operations: Automate software and IT systems monitoring, optimization, and maintenance activities by alerting, providing prescriptive guidance, or acting autonomously using ML.

- Data augmentation: Generate synthetic data to train ML models, when the original dataset is small, imbalanced or sensitive.

- Media Intelligence: Maximize the value of media content such as search and discovery, content localization, compliance, and monetization by adding ML to media workflows. Sub Use Cases: Search and discovery, Subtitling and localization, Compliance and brand Safety, Content Monetization

- ML Modernization: Improve productivity, foster responsible AI, and improve security and governance by modernizing the ML development process. Sub Use Cases: ML model development, Foundation model (FM) development, Model test and release, Model activation.

- Intelligent Search: Locate accurate and useful information faster. Transform unstructured data into intelligence by understanding document content and relationships with ML. Sub Use Cases: Accelerate research and development, Minimize regulatory and compliance risks, Improve customer interactions, Increase employee productivity

- Automatic content tagging: Use generative AI to auto-tag and index massive media libraries for easier search and recommendation.

~ Case Studies ~

Generative AI Case Studies

Understanding Retrieval Augmented Generation

LLMs are trained on a vast amount of textual data, and their capabilities are based on the knowledge they acquire from this data.

This means that if you ask them a question about data that is not part of their training set, they will not be able to respond accurately. The result is either a refusal (where the LLM responds with “I don’t know”) or, worse, a hallucination.

So, how can you build a GenAI application that can answer questions using a custom or private dataset that is not part of the LLM’s training data?

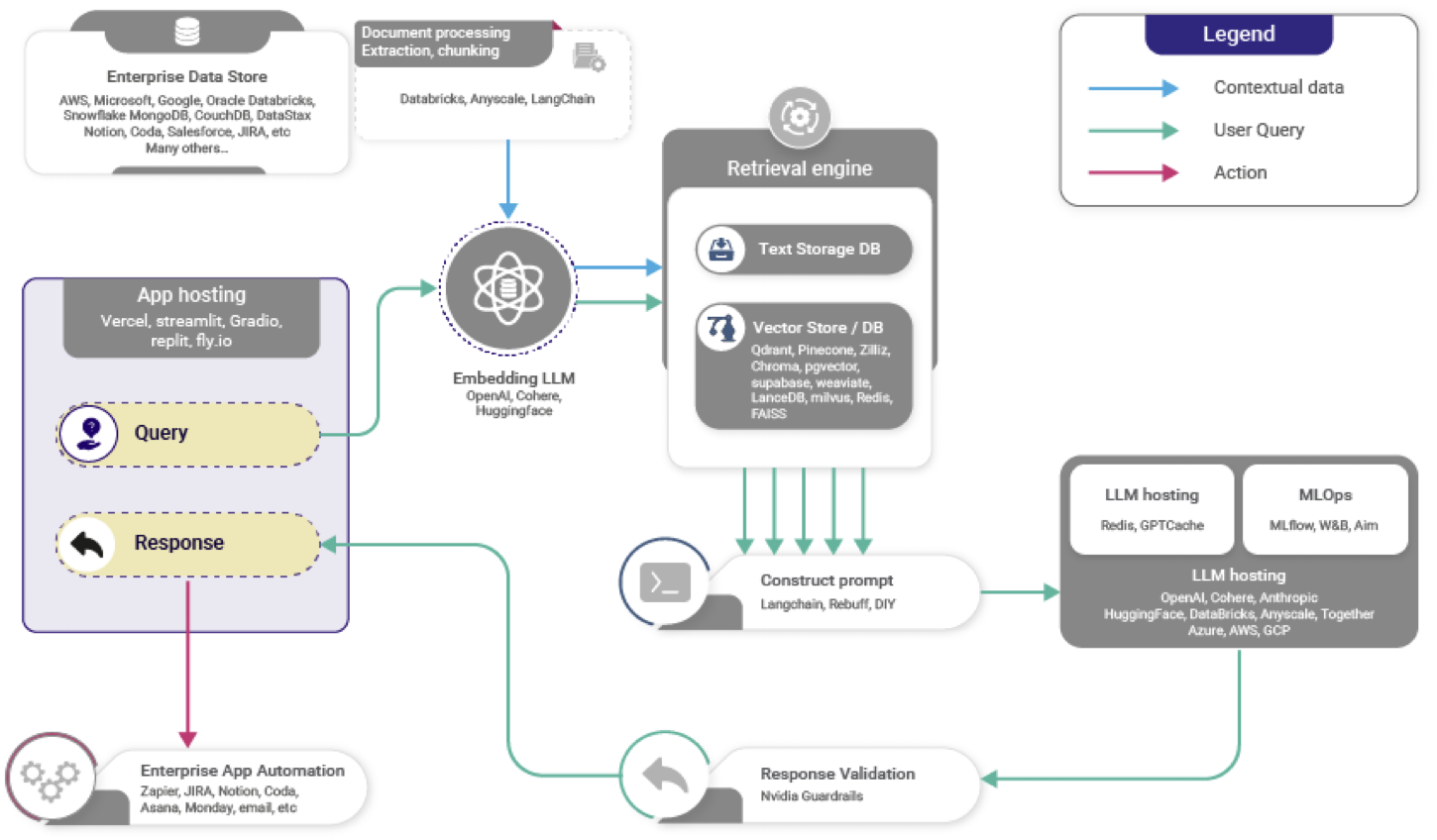

RAG Flow: A Step-by-Step Representation

Data Ingestion

- Blue arrows show the flow of data from various sources (databases, cloud, etc.) for Retrieval Augmented Generation.

- Text-based GenAI applications process data, translating and extracting text as needed.

Text Processing

- Extracted text is divided into chunks and processed using Vectara’s Boomerang model to create vector embeddings.
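The chunk-and-embed step can be sketched in a few lines of Python. This is a minimal illustration only: `chunk_text` and `toy_embed` are hypothetical helper names, the word-based chunk sizes are arbitrary, and the hashed bag-of-words vector is a crude stand-in for a real embedding model such as Boomerang, whose API is not shown here.

```python
import hashlib
import math


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def toy_embed(text: str, dims: int = 64) -> list[float]:
    """Assumption: a hashed bag-of-words vector, NOT a real embedding model."""
    vec = [0.0] * dims
    for word in text.lower().split():
        digest = hashlib.md5(word.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % dims] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    # L2-normalize so vectors can be compared with cosine similarity
    return [v / norm for v in vec] if norm else vec


document = "Refunds are issued within 14 days of purchase. " * 20
chunks = chunk_text(document, chunk_size=40, overlap=10)
vectors = [toy_embed(chunk) for chunk in chunks]
```

Overlapping chunks are a common choice because a sentence that straddles a chunk boundary still appears whole in at least one chunk. In production, the toy embedding would be replaced by calls to the embedding model of your choice.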

Query-Response Flow

- Green arrows illustrate the user query and response process.

- Query encoding and approximate nearest neighbor search retrieve relevant text chunks for response.
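To make the retrieval step concrete, here is a small Python sketch. It assumes the query and the chunks have already been encoded as vectors (the three-dimensional vectors below are made up for illustration), and it uses exact brute-force cosine similarity rather than a true approximate nearest neighbor index, which a production system would use for speed at scale. The function names are hypothetical.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec: list[float], index: list[tuple[str, list[float]]], k: int = 2):
    """Return the k (score, chunk) pairs most similar to the query, best first."""
    scored = ((cosine(query_vec, vec), chunk) for chunk, vec in index)
    return heapq.nlargest(k, scored, key=lambda pair: pair[0])


# Illustrative index: (chunk text, made-up embedding vector)
index = [
    ("Refunds are issued within 14 days.", [0.9, 0.1, 0.0]),
    ("Our office is closed on Sundays.", [0.0, 0.2, 0.9]),
    ("Refund requests need an order number.", [0.8, 0.3, 0.1]),
]
query_vec = [1.0, 0.2, 0.0]  # stands in for the encoded user query
results = retrieve(query_vec, index, k=2)
for score, chunk in results:
    print(f"{score:.3f}  {chunk}")
```

The two refund-related chunks rank above the unrelated one because their vectors point in nearly the same direction as the query vector.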

Prompt and Generation

- The relevant text chunks are used to construct a comprehensive prompt for a generative language model, such as those offered by OpenAI.

- The language model grounds its response in the provided facts, which reduces the risk of hallucination.
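As a rough illustration of prompt construction, the sketch below assembles retrieved chunks and the user question into a single prompt string. The `build_prompt` name and the prompt wording are assumptions made for this example, not a prescribed template, and the call to the language model itself is left out because it depends on the provider you choose.

```python
def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the user question into one grounded prompt."""
    # Number each chunk so the model (and a reviewer) can refer back to it
    context = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the numbered context below. "
        "If the context does not contain the answer, say you do not know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt(
    "How long do refunds take?",
    ["Refunds are issued within 14 days.", "Refund requests need an order number."],
)
print(prompt)
```

Telling the model to rely only on the supplied context, and to admit when the context is insufficient, is what steers it toward a grounded answer or an honest refusal.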

Validation and User Response

- Optionally, responses can undergo validation before being sent back to the user.
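The flow does not specify how validation is performed. One simple possibility, sketched here purely as an assumption, is a lexical support check: measure how many of the response's content words also appear in the retrieved chunks and hold back responses that fall below a threshold. The function names, the four-character word filter, and the 0.6 threshold are all illustrative choices.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(response: str, chunks: list[str]) -> float:
    """Fraction of the response's longer words that also occur in the retrieved chunks."""
    response_words = {w for w in _tokens(response) if len(w) > 3}
    if not response_words:
        return 0.0
    context_words = set().union(*(_tokens(chunk) for chunk in chunks))
    return len(response_words & context_words) / len(response_words)


def validate(response: str, chunks: list[str], threshold: float = 0.6) -> bool:
    """Accept the response only if enough of it is supported by the retrieved text."""
    return support_score(response, chunks) >= threshold


retrieved = ["Refunds are issued within 14 days of purchase."]
print(validate("Refunds are issued within 14 days.", retrieved))
print(validate("Shipping takes three weeks overseas.", retrieved))
```

A word-overlap check like this is cheap but shallow; it cannot detect a response that reuses the right words to say the wrong thing, so real deployments often layer stronger checks on top.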

Enterprise Automation (Optional)

- Red arrow indicates the optional step of taking action based on trusted responses, like automated tasks in enterprise systems.
